VOL. 22, No. 1, 1-18

This study examined how intellectual openness and gender affected the extent to which students engaged in dialectic critical discourse in computer-supported collaborative argumentation (CSCA). This study found: a) indications of differences in the number of personal rebuttals posted in reply to direct challenges between more versus less open students (ES = +0.33); b) significant differences in the number of rebuttals posted between males and females (ES = +0.66); c) significant differences in the number of personal rebuttals posted between the less open versus more open students among the males (ES = +1.32), but not among the females (ES = -0.19); and d) no difference in the types of responses posted in reply to challenges between more open versus less open students. These findings illustrate how the effects of intellectual openness can be mediated by gender, or vice versa, how openness can potentially affect student performance in CSCA, and how process-oriented strategies can be used to analyze and structure discussions to promote critical discourse.

This study examines how open-mindedness and gender affect students' engagement in critical dialectic discourse in computer-supported collaborative argumentation (CSCA). The study found: a) indications of differences in the number of personal rebuttals posted in reply to challenges between more open and less open students (ES = +0.33); b) significant differences in the number of rebuttals posted by males and females (ES = +0.66); c) significant differences in the number of personal rebuttals posted by the less open and the more open students among the males (ES = +1.32), but not among the females (ES = -0.19); and d) no difference in the types of responses posted in reply to challenges between more open and less open students. These results illustrate how the effects of open-mindedness can be influenced by gender, or vice versa, how open-mindedness can potentially affect student performance in CSCA, and how process-oriented strategies can be used to analyze and structure discussions to promote critical discourse.

Collaborative argumentation is an instructional activity used to foster critical reflection and critical discussion (Johnson & Johnson, 1992) in both face-to-face (F2F) and online environments. Argumentation involves the process of building arguments to support a position, considering and weighing evidence and counter-evidence, and testing out uncertainties to extract meaning, achieve understanding, and examine complex ill-structured problems (Cho & Jonassen, 2002). This process not only plays a key role in increasing students' understanding but also in improving group decision-making (Lemus, Seibold, Flanagin & Metzger, 2004). At this time, online discussion boards are being increasingly used to engage learners in dialogue in both online and face-to-face courses in order to promote more in-depth discussions (Tallent-Runnels et al., 2006). However, studies show that the quality of online discussions is often shallow (Pena-Shaff, Martin, & Gay, 2001). As a result, a growing number of researchers are examining ways to promote critical thinking in online discussions by using computer-supported collaborative argumentation (CSCA) — a set of online environments and procedures designed to scaffold and guide students through the processes of argumentation.

In CSCA, constraints are imposed on the types of messages students can post to a discussion in order to guide other students through the processes of collaborative argumentation. For example, Jeong (2005a, 2005b) presented students with a fixed set of message categories (arguments, challenges, supporting evidence, explanations) designed to foster argumentation and debate in an asynchronous threaded discussion board. Students were required to classify and label each message by inserting a tag corresponding to a given message category in the headings of each message prior to posting each message. Similarly, Jonassen and Remidez (2005) developed a threaded discussion tool called ShadowPDforum where message constraints are built into the computer interface, thus requiring students to select and classify the function of each message before messages are posted to discussions. This approach has been implemented in a number of communication tools to facilitate collaboration and group communication. Some of these tools include Belvedere (Cho & Jonassen, 2002; Jonassen & Kwon, 2001), CSILE (Scardamalia & Bereiter, 1996), ACT (Duffy, Dueber, & Hawley, 1998; Sloffer, Dueber & Duffy, 1999), Hermes (Karacapilidis & Papadiasi, 2001), FLE3 (Leinonen, Virtanen, & Hakkarainen, 2002), AcademicTalk (McAlister, Ravenscroft & Scanlon, 2004), and NegotiationTool (Beers, Boshuizen, & Kirschner, 2004).

Despite these efforts to promote more critical discourse, the findings in CSCA research have been mixed, with no conclusive evidence to show that CSCA improves student performance and learning (Baker & Lund, 1997). For example, message constraints (and other variations of this procedure) have been found to elicit more replies that elaborate on previous ideas, and produce greater gains in individual acquisition of knowledge (Weinberger, Ertl, Fischer, & Mandl, 2005). In another study, message constraints generated more supported claims and promoted greater knowledge of the argumentation process (Stegmann, Weinberger, Fischer, & Mandl, 2004). However, no differences were found in individual knowledge acquisition, students' ability to apply relevant information and specific domain content to arguments, and ability to converge towards a shared consensus. Furthermore, message constraints were found to inhibit collaborative argumentation, producing fewer challenges per argument than argumentation without message constraints (Jeong & Juong, 2007).

Given that collaborative argumentation is both an intellectual and social activity, one possible explanation for the mixed findings is that students' personalities or dispositions to engage in argumentation have not been taken into account in previous studies. Studies show that students are reluctant to criticize the ideas of other students (Lampert, Rittenhouse, & Crumbaugh, 1996; Nussbaum, 2002). As a result, Nussbaum et al. (2004) examined the combined effects of personality traits and the use of prompts (e.g., "My argument is … ", "On the opposite side … ", "Explain why …") for supporting critical discussions online and found that when prompts were used, disagreements were expressed more often by students who were less open to ideas, less anxious, and less assertive than students who were more open to ideas, more anxious, and more assertive. Every unit increase in a group's average score on assertiveness, openness to ideas, and anxiety was found to reduce the odds of a disagreement by 13%, 13%, and 16%, respectively. Chen & Caropreso (2004) found that groups with high profile and mixed profiles (high and low) across the "Big Five" personality traits (extraversion, neuroticism, agreeableness, conscientiousness, openness) produced more two-way messages (messages that solicit and invite others to reply) than low and neutral profile groups.

In addition, studies have found gender differences in dispositions to engage in argumentation and gender differences in performance in CSCA. Jeong & Davidson-Shivers (2006) found that females posted fewer rebuttals to the disagreements and challenges of females than males, and males posted more rebuttals to the challenges of females than females. This finding was consistent with findings from previous studies that show that men tend to assert opinions strongly as facts, place more value on presenting information using an expository style, are more likely to use crude language, violate online rules of conduct, and engage in more adversarial exchanges (Blum, 1999; Fahy, 2003; Herring, 1999; Savicki, Lingenfelter, & Kelley, 1996). In contrast, females tend to hedge, qualify and justify their assertions (using words like 'maybe', 'possibly', and 'perhaps'), express support of others, make apologies, and in general, manifest a more consensus-making orientation and epistolary style. Furthermore, females have been found to be slightly more agreeable and males to be slightly more assertive and open to ideas (Costa, Terracciano & McCrae, 2001).

All of the behavioral characteristics associated with gender and the personality traits described above are likely to be inter-related to one extent or another. However, intellectual openness appears to be the one trait that is most closely associated with the intellectual (rather than the social-emotional aspects) and primary function of collaborative argumentation. Given that intellectual openness measures the extent to which a student is open to new ideas, needs intellectual stimulation, carries conversations to higher levels, looks for deeper meaning in things, is open to change, and is interested in many things (International Personality Item Pool, 2001), the purpose of this study was to examine the combined effects of intellectual openness and gender in CSCA by determining to what extent they affect how and how often students directly respond back to challenges and disagreements — particularly with responses that help to generate deeper and more critical discussions (e.g., argument→challenge→no reply vs. counter-challenge vs. explain/justify). Given that no previous studies have compared the performance of students across different traits in terms of the number of times students post follow-up responses (or personal rebuttals) to direct challenges and the types of behaviors that students exhibit when faced with a direct challenge (e.g., ignore challenge and don't reply, or let others reply to challenge; counter-challenge; explain/justify; support with evidence), this study used what might best be described as a process-oriented approach to determine the effects of intellectual openness and to what extent those effects are mediated by gender.

Theoretical Framework and Assumptions

The theoretical basis for examining message-challenge-rebuttal sequences was based on three assumptions of the dialogic theory of language (Bakhtin, 1981; Koschmann, 1996):

As a result, the assumptions in this study are:

These assumptions determined the approach used in this study to examine how intellectual openness and gender affect the frequency and the types of responses posted in reply to challenges — particularly the types and sequences of responses that help to produce critical discourse and construct deeper meaning.

Research Questions

This study examined the combined effects of intellectual openness and gender on group performance in CSCA by addressing two questions:

Participants

The participants were graduate students (n = 54) from a major university in the Southeast region of the U.S., consisting of 35 females and 19 males, and ranging from 20 to 50 years in age. The participants in this study were enrolled in a 16-week online graduate introductory course on distance education during the fall 2004 term (8 females and 6 males), spring 2005 term (9 females and 5 males), fall 2005 term (11 females, 5 males), and spring 2006 term (7 females, 3 males). The four cohort groups were examined collectively to obtain a sufficient corpus of data for this study. Given that the majority of the students took the course at a distance, few if any of the students had previous opportunities to interact with one another outside of the online environment. The performance of 10 students was omitted from analysis because four students did not complete the instrument used to measure intellectual openness and six students dropped the course and did not participate in all the group discussions examined in this study.

Debate Procedures

The students participated in four weekly online team debates using asynchronous threaded discussion forums in Blackboard™, a web-based course management system. The debates were structured so that: a) student participation in the debates and other discussions throughout the course contributed to 20% of the course grade; b) for each debate, students were required to post a minimum of four messages; c) prior to each debate, students were randomly assigned to one of two teams (balanced by gender) to either support or oppose a given position; and d) students were required to vote on the team that presented the strongest arguments following each debate. The purpose of each debate was to critically examine design issues, concepts and principles in distance learning covered during the week of the debate. The students debated claims such as: "The Dick & Carey ISD model is an effective model for designing the instructional materials for this course", "Type of media does not make any significant contribution to student learning", "Given the data and needs assessment, the fictitious country of NED should not develop a distance learning system", and "Print is the preferred medium for delivering a course study guide".

Online Debate Messages and Message Labels

Students were presented a list of four message categories (see Figure 1) during the debates to encourage students to support and refute presented arguments with supporting evidence, explanations, and challenges (Jeong & Juong, 2007). Based loosely on Toulmin's (1958) model of argumentation, the response categories and their definitions were presented to students prior to each debate. Each student was required to classify each posted message by category by inserting the corresponding label into the subject headings of each message (along with a short descriptive title representing the main idea presented in the message), and restrict the content of each message to address one and only one category or function at a time. The investigator occasionally checked the message labels to determine if students were appropriately labeling their messages according to the described procedures. Students were instructed to return to a message to correct errors in their labels. No participation points were awarded for a given debate if a student failed to follow these procedures.

Figure 1. Example instructions on labeling messages in the online debates.

| Label | Description of Label | Example Message by Label |

| + | Identifies a message posted by a student assigned to the team supporting the given claim/statement | -- |

| - | Identifies a message posted by a student assigned to the team opposing the given claim/statement | -- |

| ARG# | Identifies a message that presents one and only one argument or reason for using or not using chats instead of threaded discussion forums. Number each posted argument by counting the number of arguments already presented by your team. Sub-arguments need not be numbered. ARG = "argument". | -ARG1 One's choice of media makes very little difference in students' learning because the primary factor that determines level of learning is one's choice of instructional method. |

| EXPL | Identifies a reply/message that provides additional support, explanation, clarification, elaboration of an argument or challenge. | -EXPL As a result, media are merely vehicles that deliver instruction but do not influence student achievement any more than the truck that delivers our groceries causes changes in our nutrition. |

| BUT | Identifies a reply/message that questions or challenges the merits, logic, relevancy, validity, accuracy or plausibility of a presented argument (ARG) or challenge (BUT). | +BUT However, one's choice of media can affect or determine which instructional methods are or are not used. If that is the case, then choice of media can make a significant difference. |

| EVID | Identifies a reply/message that provides proof or evidence to establish the validity of an argument or challenge. | -EVID Media studies, regardless of the media employed, tend to result in "no significant difference" conclusions (Mielke, 1968). |

Figure 2. Example debate with labeled messages in a Blackboard™ threaded discussion forum.

| SUPPORT statement because... | Instructor | Sat Oct 2, 2004 11:18 am |

| . +ARG1 MediaIsButAMere… | Student name | Mon Oct 4, 2004 8:47 pm |

| . . -EVID MediaIsButAMere… | Student name | Tue Oct 5, 2004 7:09 pm |

| . . . +BUT RelativityTheory… | Student name | Tue Oct 5, 2004 9:43 pm |

| . . . . -BUT Relativity… | Student name | Sat Oct 9, 2004 10:12 am |

| . . -BUT Whataboutemotions? | Student name | Tue Oct 5, 2004 9:53 pm |

| . . +EVID DistEdEffectiveAsF2F | Student name | Tue Oct 5, 2004 10:40 pm |

| . . -BUT Mediaamerevehicle | Student name | Oct 6, 2004 8:19 pm |

| . . +EVID MooreConcurs | Student name | Wed Oct 6, 2004 10:07 pm |

| . . . +EXPL MediaSelection… | Student name | Sun Oct 10, 2004 12:35 am |

| . . . -BUT WellChosen… | Student name | Oct 10, 2004 4:31 pm |

| . . . . +BUT Supporting… | Student name | Sun Oct 10, 2004 5:37 pm |

| . . -BUT Mediaismorethe… | Student name | Fri Oct 8, 2004 5:30 pm |

| . . . +BUT Supporting… | Student name | Sat Oct 9, 2004 8:51 am |

| . . -BUT LearningNotSimply… | Student name | Mon Oct 11, 2004 9:54 am |

| . +ARG2 Standards for… | Student name | Wed Oct 6, 2004 1:48 pm |

| . . +BUT Clarification? | Student name | Sun Oct 10, 2004 5:39 pm |

| . +ARG3 MediaUnrelatedto… | Student name | Wed Oct 6, 2004 3:12 pm |

| . . -BUT MediaUnrelatedto… | Student name | Wed Oct 6, 2004 8:26 pm |

| . . . +BUT MediaSelection | Student name | Thu Oct 7, 2004 9:20 am |

| . . . . -BUT MediaSelection | Student name | Sun Oct 10, 2004 11:21 am |

| . . +EVID MethodNotMedia | Student name | Wed Oct 6, 2004 11:04 pm |

| . . -BUT MediaUnrelatedto… | Student name | Sat Oct 9, 2004 10:59 am |

Note: The names of students have been removed to protect students' confidentiality. The discussion thread for posting arguments to oppose the given statement ("OPPOSE statement because …") is out of view in the above illustration.

Students were also instructed to identify each message by team membership by adding "-" for the opposing team or "+" for the supporting team at the beginning of each label (e.g., +ARG, -ARG). These tags enabled students to easily locate the exchanges between the opposing and supporting teams during the debates (e.g., +ARG → -BUT) and respond to the exchanges to advance their team's position. Figure 2 shows an example of how the labeled messages appeared in the discussion board. One discussion thread was designated for posting supporting arguments, and a second but separate thread (not shown in Figure 2) was designated for posting opposing arguments. Figure 3 provides an excerpt from one of the debates to illustrate some of the messages that were coded for each message category.

Personality Trait Instruments

Students were measured on intellectual openness using a ten-item instrument (see Table 1) from the International Personality Item Pool (2001) with a reliability coefficient of .82. To measure the level of intellectual openness, students were asked to rate to what extent they agreed (on a scale of one to five) with statements like "open to new ideas", "need intellectual stimulation", "carry the conversation to a higher level", "look for a deeper meaning in things", "am open to change", and "am interested in many things". The scores across all ten items were added to compute a total score for each student. The median score among all students in the study was used to determine which students were classified as low and high on intellectual openness. The mean score for intellectual openness was 9.3 (SD = 5.41, n = 54) with a minimum score of -6 and maximum score of 20. The differences in scores between the male (M = 10.68, SD = 4.69, n = 19) and female students (M = 8.54, SD = 5.69, n = 35) were not statistically significant, t(52) = 1.40, p = .167.

Table 1

Ten-item scale used to measure intellectual openness

| Am interested in many things | +1 |

| Am open to change | +1 |

| Carry the conversation to a higher level | +1 |

| Prefer variety to routine | +1 |

| Want to increase my knowledge | +1 |

| Am not interested in abstract ideas | -1 |

| Am not interested in theoretical discussions | -1 |

| Prefer to stick with things that I know | -1 |

| Rarely look for a deeper meaning in things | -1 |

| Try to avoid complex people | -1 |
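The scoring and median split described above can be illustrated with a minimal sketch. It assumes that each item is rated from 1 to 5, that the +1/-1 keys in Table 1 are applied as signs before summing (which is consistent with the reported range of -6 to 20), and that scores equal to the median fall into the low group; the last point is not stated in the text. The function names are hypothetical.

```python
from statistics import median

# Keying taken from Table 1: +1 items add to the total, -1 items subtract.
ITEM_KEYS = {
    "Am interested in many things": +1,
    "Am open to change": +1,
    "Carry the conversation to a higher level": +1,
    "Prefer variety to routine": +1,
    "Want to increase my knowledge": +1,
    "Am not interested in abstract ideas": -1,
    "Am not interested in theoretical discussions": -1,
    "Prefer to stick with things that I know": -1,
    "Rarely look for a deeper meaning in things": -1,
    "Try to avoid complex people": -1,
}


def openness_score(ratings):
    """Sum the signed ratings (each rating 1-5) into a total from -20 to +20."""
    return sum(ITEM_KEYS[item] * rating for item, rating in ratings.items())


def median_split(scores):
    """Classify each student as 'high' or 'low' relative to the median score.

    Assumption: a score equal to the median is classified as 'low'.
    """
    cutoff = median(scores.values())
    return {name: ("high" if score > cutoff else "low")
            for name, score in scores.items()}
```

A student who rates every positively keyed item 5 and every negatively keyed item 1 receives the maximum possible total of 20 under this scheme.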

The Data Set

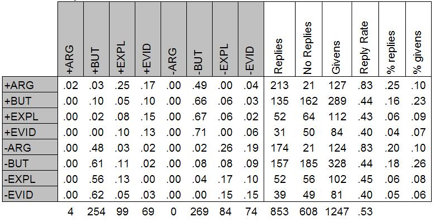

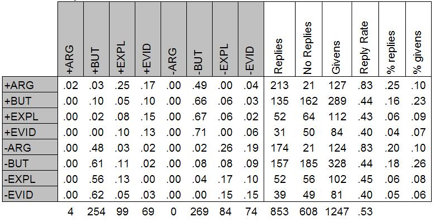

ForumManager (Jeong, 2005c) was used to download the student-labeled messages from the Blackboard™ discussion forums into Microsoft Excel while maintaining the hierarchical threaded format in order to preserve the information needed to determine which responses were posted in reply to which messages. The Discussion Analysis Tool or DAT (Jeong, 2005b, 2005d) was then used to automatically extract the codes assigned to each message from the subject headings to tag each message as an argument (ARG), evidence (EVID), challenge (BUT), or explanation (EXPL). The data analyzed in this study (see Figure 4) consisted of 1247 messages (not including the 51 instructor's messages/prompts and 14 messages that were not assigned a label by students). DAT was then used to tally the number of specific types of responses elicited by each specific type of message (e.g., number of challenges posted in response to each observed argument) to generate the raw scores needed to test the effects of intellectual openness.
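As an illustration of this extraction and tallying step, the following sketch parses labels of the form shown in Figures 1 and 2 and counts message-response pairs. It is not the DAT implementation; the regular expression, the tuple layout of a message, and the function names are assumptions made for this example.

```python
import re
from collections import Counter

# Optional team sign, one of the four category codes, optional argument number.
LABEL_RE = re.compile(r"^\s*([+-])?\s*(ARG|EXPL|BUT|EVID)(\d+)?\b")


def parse_label(subject):
    """Return the team sign, category, and argument number, or None if unlabeled."""
    match = LABEL_RE.match(subject)
    if match is None:
        return None
    sign, category, number = match.groups()
    return {"team": sign, "category": category,
            "number": int(number) if number else None}


def tally_replies(messages):
    """Count (parent category, reply category) pairs.

    messages: list of (message_id, parent_id, subject) tuples, where
    parent_id is None for a message that starts a thread.
    """
    labels = {mid: parse_label(subject) for mid, _, subject in messages}
    pairs = Counter()
    for mid, parent_id, _ in messages:
        reply, parent = labels[mid], labels.get(parent_id)
        if reply and parent:  # skip unlabeled messages and thread starters
            pairs[(parent["category"], reply["category"])] += 1
    return pairs
```

Dividing each count by the total number of replies to the parent category would give proportions of the kind reported in Figure 4.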

Figure 3. Example of a coded thread generated by an argument posted in opposition to the claim "Media makes very little or no significant contributions to learning".

| Category | Message Text |

| ARG | Borje Holmberg's Theory of Interaction and Communication states that "learning pleasure supports student motivation" and "strong student motivation facilitates learning" (Simonson, p. 43). I would argue that compelling media and multi-media increases learning pleasure and thus facilitates student learning - Bob |

| +BUT |

|

| +EVID |

|

| BUT |

|

| -EXPL |

|

| -EVID |

|

ARG = argument, BUT = challenge, EVID = supporting evidence, EXPL = explanation

To determine the extent to which students were able to correctly assign labels to each message posted to the debates, one debate from each course was randomly selected and coded by the investigator to test for errors in the labels. Overall percent agreement was .91 based on the codes of 158 messages, consisting of 42 arguments, 17 supporting evidence, 81 challenges, and 17 explanations. The Cohen Kappa coefficient, which accounts for chance in coding errors based on the number of categories in the coding scheme, was .86, indicating excellent inter-rater reliability (Bakeman & Gottman, 1997, p. 66).
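For readers unfamiliar with the statistic, the sketch below computes percent agreement and Cohen's kappa from two parallel lists of codes, using the standard definition in which chance agreement is estimated from each coder's category frequencies. The function name is hypothetical, and the study's coded messages are not reproduced here.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Return (percent agreement, Cohen's kappa) for two coders' labels.

    Kappa is undefined (division by zero) when chance agreement equals 1,
    i.e. when both coders used one and the same category throughout.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: product of the two coders' marginal proportions,
    # summed over categories.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    return observed, (observed - expected) / (1 - expected)
```

Here `labels_a` would hold the labels assigned by students and `labels_b` the investigator's codes for the same messages.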

Figure 4. Interaction data produced by DAT software

Example: 48% of replies to opposing arguments (-ARG) were challenges (+BUT).

Observed in the discussions were a total of 657 instances where messages elicited one or more challenges. In 514 (78%) of these 657 instances, the individual student receiving the direct challenge did not post a personal rebuttal to the challenge. In the remaining 143 (22%) of the 657 instances, a personal rebuttal was posted in reply to the challenge. Of these 143 instances where students posted follow-up responses to challenges, 112 were counter-challenges, 29 were explanations, and 4 were supporting evidence. No outliers were found (beyond 3 standard deviations) in the number of personal rebuttals posted by the 54 students observed in this study.
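The counting of personal rebuttals can be sketched as follows. The sketch treats each challenge (BUT) posted in reply to a message as one instance and checks whether the author of the challenged message replied directly to that challenge; this unit of analysis, the dictionary layout of a message, and the function name are assumptions, since the exact procedure used by DAT is not given in the text.

```python
from collections import Counter


def rebuttal_outcomes(messages):
    """Tally how challenged authors responded to direct challenges.

    messages: list of dicts with keys 'id', 'parent', 'author', 'category'.
    Returns a Counter keyed by the category of each personal rebuttal,
    plus 'NO_REPLY' for challenges that the challenged author left unanswered.
    """
    by_id = {m["id"]: m for m in messages}
    children = {}
    for m in messages:
        children.setdefault(m["parent"], []).append(m)

    outcomes = Counter()
    for challenge in messages:
        target = by_id.get(challenge["parent"])
        if challenge["category"] != "BUT" or target is None:
            continue
        # A personal rebuttal is a direct reply to the challenge that was
        # written by the author of the challenged message.
        personal = [r for r in children.get(challenge["id"], [])
                    if r["author"] == target["author"]]
        if personal:
            for reply in personal:
                outcomes[reply["category"]] += 1
        else:
            outcomes["NO_REPLY"] += 1
    return outcomes
```

Grouping the same tally by the challenged author would yield the per-student counts of personal rebuttals analyzed in the next section.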

Frequency of Personal Rebuttals

The results of a 2 (low vs. high openness) x 2 (male vs. female) univariate analysis of variance revealed no significant difference in the number of personal rebuttals between more open versus less intellectually open students, F(1, 50) = 3.55, p = .065. However, the observed difference did approach statistical significance. The more open students posted 45% more personal rebuttals (M = 2.62, SD = 2.61, n = 29) than the less open students (M = 1.80, SD = 2.40, n = 25), with effect size of +0.33. At the same time, the results of post-hoc tests showed that the more open students left 33% more challenges without rebuttals (M = 10.76, SD = 5.59, n = 29) than the less open students (M = 8.08, SD = 5.61, n = 25), t(52) = -1.75, p = .086, effect size of -0.48.
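The effect sizes in this paragraph can be checked from the reported means, standard deviations, and group sizes. The sketch below assumes that the effect size is Cohen's d computed with a pooled standard deviation, which the text does not state; under that assumption the summary statistics above give values that round to 0.33 for personal rebuttals and, taking the less open group first, to -0.48 for unanswered challenges. The function names are hypothetical.

```python
from math import sqrt


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Standardized mean difference (group 1 minus group 2), pooled SD."""
    return (m1 - m2) / pooled_sd(sd1, n1, sd2, n2)


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t statistic with n1 + n2 - 2 degrees of freedom."""
    return (m1 - m2) / (pooled_sd(sd1, n1, sd2, n2) * sqrt(1 / n1 + 1 / n2))


# Personal rebuttals: more open (group 1) versus less open (group 2).
d_rebuttals = cohens_d(2.62, 2.61, 29, 1.80, 2.40, 25)

# Unanswered challenges: less open (group 1) versus more open (group 2).
d_unanswered = cohens_d(8.08, 5.61, 25, 10.76, 5.59, 29)
t_unanswered = pooled_t(8.08, 5.61, 25, 10.76, 5.59, 29)
```

Other effect-size formulas (for example, dividing by one group's standard deviation) give somewhat different values, so agreement with a reported figure does not by itself confirm which formula was used.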

A significant difference was found in the number of personal rebuttals posted by male versus female students, F(1, 50) = 4.66, p = .036. Males posted nearly twice as many personal rebuttals (M = 3.31, SD = 2.73, n = 19) as females (M = 1.66, SD = 2.23, n = 35), with an effect size of +0.66.

The interaction between openness and gender was also found to be significant, F(1, 50) = 6.50, p = .014. This finding shows that the effects of intellectual openness were substantially influenced by gender. Among the males, students who were more intellectually open posted 1.8 times more personal rebuttals (M = 4.54, SD = 2.94, n = 11) than those who were less intellectually open (M = 1.62, SD = 1.06, n = 8), with effect size of +1.32. This finding, however, must be interpreted with caution given the small number of males observed in this study. Among the females, students who were more intellectually open posted about 23% fewer (not more) personal rebuttals (M = 1.44, SD = 1.50, n = 18) than students who were less intellectually open (M = 1.88, SD = 2.85, n = 17), with an effect size of -0.19.

Types of Responses to Challenges

The number of times no personal rebuttals were posted in reply to a challenge and the number of times challenges, explanations, and responses with supporting evidence were posted in reply to challenges were counted for each individual student. To control for variance in the observed counts across individual students, the actual counts for each response type for each individual were translated into relative frequencies for each individual (e.g., 3, 5, 1, 1 was translated to .30, .50, .10, .10). The relative frequencies were then multiplied by a value of 10. The adjusted frequencies were then totaled for each response category across all students who were less intellectually open, and then totaled again for all students who were more intellectually open to produce the following numbers: 25 BUT, 8 EXPL, 1 EVID, and 197 No reply (or .10 BUT, .03 EXPL, .01 EVID, .86 No reply) produced by the less open students, and 49 BUT, 8 EXPL, 2 EVID, and 231 No reply (or .16 BUT, .03 EXPL, .01 EVID, and .80 No reply) produced by the more open students. Using the Chi-Square test of independence, this study found no significant differences in the way students responded to direct challenges between students who were more open versus less intellectually open, χ²(3) = 4.74, p = .19.
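The normalization step and the test statistic can be sketched as follows. The code assumes the standard Pearson chi-square statistic for a contingency table; the function names are hypothetical. Because the category totals printed above are rounded, the statistic computed from them need not equal the reported value, which was presumably obtained from the unrounded adjusted frequencies.

```python
def adjusted_frequencies(counts, scale=10):
    """Convert one student's raw counts to relative frequencies times `scale`.

    Example from the text: counts of 3, 5, 1, 1 become .30, .50, .10, .10,
    and multiplying by 10 gives 3, 5, 1, 1 again for a student whose
    counts happen to sum to 10.
    """
    total = sum(counts)
    if total == 0:
        return [0.0] * len(counts)  # student received no challenges
    return [scale * c / total for c in counts]


def chi_square_statistic(table):
    """Pearson chi-square statistic for a table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    return statistic


# Rounded totals from the text; columns are BUT, EXPL, EVID, No reply.
reported_totals = [
    [25, 8, 1, 197],   # less open students
    [49, 8, 2, 231],   # more open students
]
statistic = chi_square_statistic(reported_totals)
degrees_of_freedom = (len(reported_totals) - 1) * (len(reported_totals[0]) - 1)
```

The degrees of freedom, (2 - 1) x (4 - 1) = 3, match the value reported for the test.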

| Between less open students | Between more open students |

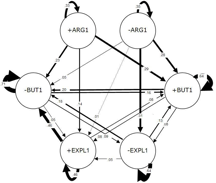

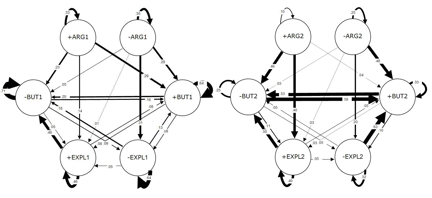

Figure 5. Transitional state diagrams of response patterns in exchanges produced by students within group.

Post-hoc tests used to determine the effects of gender on response patterns could not be conducted due to an insufficient number of male participants (n = 6) that were both low in intellectual openness and received at least one opportunity to reply to a direct challenge with a personal rebuttal. However, Figure 5 reveals that within the exchanges between the more open students only, the students were more likely to respond to arguments with challenges and respond to challenges with counter-challenges than in the exchanges between less open students only. This finding (although speculative in nature) suggests potential differences in performance between groups that consist only of students who are more open and students who are less open.

The purpose of this study was to examine how intellectual openness and gender affected the way students responded to direct challenges and to what extent their responses exhibited critical discourse in CSCA. This study found no significant difference in the number of personal rebuttals posted in response to direct challenges between the more versus less intellectually open students. However, this study found that the males posted nearly twice as many personal rebuttals as the females. This study also found that the effect of intellectual openness depended on the gender of the student posting rebuttals to direct challenges. The more open male students posted nearly three times as many personal rebuttals as the less open male students (M = 4.54 versus M = 1.62), a difference that was found to be large and substantial. In contrast, the more open female students were found to have posted fewer personal rebuttals than the less open female students. Finally, no differences were found in the way students responded to direct challenges between students with low versus high intellectual openness. More intellectually open students, for example, were just as likely to react to a direct challenge by posting a counter-challenge, explanation, supporting evidence, or not posting a follow-up response, as the less intellectually open students.

Given that no significant differences were found in the number of rebuttals posted by more versus less intellectually open students, this finding was not consistent with the findings of Nussbaum et al. (2004), who had found that disagreements were expressed more often by students who were less intellectually open. However, this study did find significant differences between genders, a finding that is consistent with the findings reported in a previous study (Jeong & Davidson-Shivers, 2006) that showed male students posted more rebuttals in reply to challenges than female students. But more importantly, this study found that gender played a significant role in mediating the effects of intellectual openness. Level of intellectual openness was found to have a large and substantial effect on the number of times students posted personal rebuttals, but only for the male students and not for the female students. As a result, these findings suggest that measuring intellectual openness may be useful only when implemented with male participants, but not with female participants.

One possible explanation as to why intellectual openness influenced the performance of males but not females may lie in the specific items used in the scale to measure openness. For example, items like "open to new ideas" and "open to change" could theoretically serve more as a measure of students' agreeableness and willingness to accept opposing viewpoints without question and further discussion, a trait that is observed more often among females, not males (Costa, Terracciano & McCrae, 2001; Jeong & Davidson-Shivers, 2006). In contrast, the trait "carry conversations to a higher level" seems to be a sub-trait that has a more direct association with how and how often students responded to challenges. At the same time, there is the possibility that some of the sub-traits in the scale may be more dominant (e.g., "carry conversations to a higher level") or less dominant (e.g., "open to new ideas" and "open to change") among males, and vice versa for female students. As a result, the ten-item scale used in this study may need to be refined (or even simplified) before it can be used to accurately gauge and predict the performance of both male and female participants in CSCA.

Nevertheless, the findings in this study provide some answers and guidelines as to why, when, and how to use measures of intellectual openness (or other personality traits) and gender to predict how students will perform in CSCA and to identify which students need direct interventions to promote more critical discourse. Specifically, the findings suggest that intellectual openness can serve as a useful criterion for assigning male students to mixed-gender or all-male groups in order to maximize exchanges between students that result in deeper inquiry and the construction of deeper meaning and understanding. Although the 10-item scale used to measure intellectual openness is very short and not necessarily difficult to implement, what is still needed at this time is an online tool that can automate the task of surveying students for one or more instructor-selected traits, assigning students across a given number of groups using random stratification to balance groups across selected traits, and notifying students of their group assignments. Ultimately, the findings and process-oriented methods described in this study can potentially be used to formulate pedagogical rules and stochastic models that can be implemented in future CSCA systems — systems that might one day provide the tools to automate the tasks of assigning students to groups based on observed traits, diagnosing student performance, and delivering interventions to optimize group performance.
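The group-assignment step of such a tool might look like the following sketch, which ranks students on a single trait score, cuts the ranking into blocks of as many students as there are groups, and deals each block out to the groups in random order. This is one simple form of stratified random assignment and is offered only as an illustration; the function name, the single-trait input, and the blocking scheme are assumptions, not a description of an existing system.

```python
import random


def stratified_groups(scores, n_groups, seed=None):
    """Assign students to groups so that each group spans the trait range.

    scores: dict mapping student name to a trait score.
    Students are ranked by score and cut into consecutive blocks of
    `n_groups`; the members of each block go to different, randomly
    chosen groups.
    """
    rng = random.Random(seed)
    ranked = sorted(scores, key=scores.get)
    groups = [[] for _ in range(n_groups)]
    for start in range(0, len(ranked), n_groups):
        block = ranked[start:start + n_groups]
        # Distinct random group indices, one per student in the block.
        order = rng.sample(range(n_groups), len(block))
        for group_index, student in zip(order, block):
            groups[group_index].append(student)
    return groups
```

Balancing on several traits at once, or on gender as in the debates described earlier, would require forming the blocks within each combination of traits before dealing students out.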

However, the findings in this study are not conclusive due to a number of limitations in the scope and design of the study. Future studies will need to examine: a) a larger sample to compare performance between male and female students who score in the lower versus upper quartiles; b) the effects across task structures other than those used in this study to encourage argumentation (e.g., message constraints, assigning students to opposing teams, requiring a minimum number of postings); c) smaller discussion groups where larger deviations in trait scores are more likely to affect group performance; d) the effects across a wider range of exchanges (e.g., challenge→concede, challenge→derogatory remark) that mark both constructive and non-constructive interactions; e) differences in performance produced by other traits more closely associated with the intellectual dimensions of argumentation, such as active versus reflective learning styles (work in progress); and f) how the traits of both the messenger and challenger affect the frequency of rebuttals posted by the messenger.
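To make the notion of message-response exchanges concrete, the following minimal sketch tallies coded exchanges and converts the counts into the proportion of each response type that follows a given message type. It is an illustration under stated assumptions, not the software used in this study: the function name `transition_probabilities` and the coded exchanges in the example are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def transition_probabilities(
    exchanges: Iterable[Tuple[str, str]],
) -> Dict[str, Dict[str, float]]:
    """Convert (message type, response type) pairs into the proportion
    of each response type observed after each message type.

    Hypothetical helper for illustration only.
    """
    counts: Dict[str, Counter] = defaultdict(Counter)
    for message_type, response_type in exchanges:
        counts[message_type][response_type] += 1

    return {
        message_type: {
            response_type: n / sum(responses.values())
            for response_type, n in responses.items()
        }
        for message_type, responses in counts.items()
    }


if __name__ == "__main__":
    # Hypothetical coded exchanges: each pair is (message, reply to it).
    coded = [
        ("challenge", "rebuttal"),
        ("challenge", "rebuttal"),
        ("challenge", "concede"),
        ("challenge", "derogatory remark"),
    ]
    for message, responses in transition_probabilities(coded).items():
        for response, p in responses.items():
            print(f"{message} -> {response}: {p:.2f}")
```

Comparing such proportions between groups of students (for example, more open versus less open) is one way the wider range of exchanges in item d) could be examined; tests of statistical significance are outside the scope of this sketch.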

Overall, this study provides insights into how differences in personality traits and gender can affect group performance in CSCA. Specifically, this study was successful in what was an initial attempt to determine if, when, and how intellectual openness and gender together affect the specific processes and message-response exchanges that define and promote critical thinking in CSCA. The methods and software tools used in this study to measure the combined effects of intellectual openness and gender present a unique and potentially useful approach to developing and empirically testing instructional interventions that use information on learner characteristics to predict, diagnose, intervene, and optimize group performance in computer-supported collaborative learning, work, decision-making, and problem-solving.

Bakeman, R., & Gottman, J. (1997). Observing interaction: An introduction to sequential analysis. Cambridge: Cambridge University Press.

Baker, M. & Lund, K. (1997). Promoting reflective interactions in a CSCL environment. Journal of Computer Assisted Learning, 13, 175-193.

Baker, M. (1999). Argumentation and constructive interaction. In P. Coirier & J. E. B. Andriessen (Eds.), Foundations of argumentative text processing (pp. 179-202). Amsterdam: Amsterdam University Press.

Bakhtin, M. (1981). Dialogic imagination. Ed. Michael Holquist. Trans. Caryl Emerson and Michael Holquist. Austin: University of Texas Press.

Beers, P. J., Boshuizen, E., & Kirschner, P. (2004). Computer support for knowledge construction in collaborative learning environments. Paper presented at the American Educational Research Association Conference. San Diego, CA.

Blum, K. (1999). Gender differences in asynchronous learning in higher education: Learning styles, participation barriers and communication patterns. Journal of Asynchronous Learning Networks, 3(1). Available from http://www.sloan-c.org/publications/jaln/v3n1/index.asp, last accessed 3 March 2004.

Chen, S.-J., & Caropreso, E. (2004). Influence of personality on online discussion. Journal of Interactive Online Learning, 3(2).

Cho, K., & Jonassen, D. (2002). The effects of argumentation scaffolds on argumentation and problem solving. Educational Technology Research and Development, 50(3), 5-22.

Costa, P., Terracciano, A. & McCrae, R. (2001). Gender differences in personality traits across cultures: Robust and surprising findings. Journal of Personality and Social Psychology, 81(2), 322-331.

Duffy, T. M., Dueber, B., & Hawley, C. L. (1998). Critical thinking in a distributed environment: A pedagogical base for the design of conferencing systems. In C. J. Bonk & K. S. King (Eds.), Electronic collaborators: Learner-centered technologies for literacy, apprenticeship, and discourse (pp. 51-78). Mahwah, NJ: Erlbaum.

Fahy, P. (2003). Indicators of support in online interaction. International Review of Research in Open and Distance Learning, 4(1). Available from http://www.irrodl.org/content/v4.1/fahy.html, last accessed 21 April 2004.

Herring, S. (1999). The rhetorical dynamics of gender harassment online. The Information Society, 15(3), 151-167. Special issue on the Rhetorics of Gender in Computer-Mediated Communication, edited by L.J. Gurak.

International Personality Item Pool (2001). A scientific collaboratory for the development of advanced measures of personality traits and other individual differences. Available from http://ipip.ori.org, last accessed 20 March 2006.

Jeong, A., & Joung, S. (2007). The effects of response constraints and message labels on group interaction and argumentation in online discussions. Computers & Education, 48, 427-445.

Jeong, A. (2003). Sequential analysis of group interaction and critical thinking in online threaded discussions. The American Journal of Distance Education, 17(1), 25-43.

Jeong, A. (2005a). The effects of linguistic qualifiers on group interaction patterns in computer-supported collaborative argumentation. International Review of Research in Open and Distance Learning, 6(3). Available from http://www.irrodl.org/content/v6.3/jeong.html, last accessed 20 March 2006.

Jeong, A. (2005b). A guide to analyzing message-response sequences and group interaction patterns in computer-mediated communication. Distance Education, 26(3), 367-383.

Jeong, A. (2005c). ForumManager. Available from http://bbproject.tripod.com/ForumManager, last accessed 30 January 2007.

Jeong, A. (2005d). Discussion analysis tool. Available from http://garnet.fsu.edu/~ajeong/DAT, last accessed 30 January 2007.

Jeong, A., & Davidson-Shivers, G. (2006). The effects of gender interaction patterns on student participation in computer-supported collaborative argumentation. Educational Technology Research and Development, 54(6), 543-568.

Johnson, D. & Johnson, R. (1992). Creative controversy: Intellectual challenge in the classroom. Edina, MN: Interaction Book Company.

Jonassen, D. H., & Kwon, H. I. (2001). Communications patterns in computer mediated versus face-to-face group problem solving. Educational Technology Research and Development, 49(1), 35-51.

Jonassen, D., & Remidez, H. (2005). Mapping alternative discourse structures onto computer conferences. International Journal of Knowledge and Learning, 1(1), 113-129.

Karacapilidis, N., & Papadias, D. (2001). Computer supported argumentation and collaborative decision making: The Hermes system. In Proceedings of the Computer Support for Collaborative Learning (CSCL) 2001 Conference.

Koschmann, T. (1996). Paradigm shifts and instructional technology. In T. Koschmann (Ed.), CSCL: Theory and practice of an emerging paradigm (pp. 1-23). Mahwah, NJ: Lawrence Erlbaum.

Lampert, M. L., Rittenhouse, P., & Crumbaugh, C. (1996). Agreeing to disagree: Developing sociable mathematical discourse. In D. R. Olson & N. Torrance (Eds.), Handbook of human development in education (pp. 731-764). Cambridge, MA: Blackwell.

Leinonen, T., Virtanen, O., & Hakkarainen, K. (2002). Collaborative discovering of key ideas in knowledge building. In Proceedings of the Computer Support for Collaborative Learning 2002 Conference. Boulder, CO. Available from http://fle3.uiah.fi, last accessed 20 March 2006.

Lemus, D., Seibold, D., Flanagin, A., & Metzger, M. (2004). Argument and decision making in computer-mediated groups. Journal of Communication, 54(2), 302-320.

McAlister, S., Ravenscroft, A., & Scanlon, E. (2004). Combining interaction and context design to support collaborative argumentation in education. Journal of Computer Assisted Learning, 20(3), 194-204.

Nussbaum, E. M. (2002). How introverts versus extroverts approach small-group argumentative discussions? The Elementary School Journal, 102, 183-197.

Nussbaum, E. M., Hartley, K., Sinatra, G.M., Reynolds, R.E., & Bendixen, L.D. (2004). Personality interactions and scaffolding in on-line discussions. Journal of Educational Computing Research, 30(1 & 2), 113-137.

Pena-Shaff, J., Martin, W., & Gay, G. (2001). An epistemological framework for analyzing student interactions in computer mediated communication environments. Journal of Interactive Learning Research, 12, 41-68.

Savicki, V., Lingenfelter, D., & Kelley, M. (1996). Gender language style and group composition in Internet discussion groups. Journal of Computer-Mediated Communication, 2(3). Available from http://www.ascusc.org/jcmc/vol2/issue3/savicki.html, last accessed 30 March 2006.

Scardamalia, M., & Bereiter, C. (1996). Computer support for knowledge-building communities. In T. Koschmann (Ed.), CSCL: Theory and practice of an emerging paradigm (pp. 249-268). Mahwah, NJ: Erlbaum.

Sloffer, S., Dueber, B., & Duffy, T. (1999). Using asynchronous conferencing to promote critical thinking: Two implementations in higher education. In Proceedings of the 32nd Hawaii International Conference on System Sciences. Maui, Hawaii, January 1999.

Stegmann, K., Weinberger, A., Fischer, F., & Mandl, H. (2004). Scripting argumentative knowledge construction in computer-supported learning environments. In P. Gerjets, P. A. Kirschner, J. Elen, & R. Joiner (Eds.), Instructional design for effective and enjoyable computer-supported learning.

Tallent-Runnels, M., Thomas, J., Lan, W. & Cooper, S. (2006). Teaching courses online: A review of the research. Review of Educational Research, 76(1), 93-135.

Toulmin, S. E. (1958). The uses of argument. Cambridge: Cambridge University Press.

Weinberger, A., Ertl, B., Fischer, F. & Mandl, H. (2005). Epistemic and social scripts in computer-supported collaborative learning. Instructional Science, 33, 1-30.

Wiley, J., & Voss, J. (1999). Constructing arguments from multiple sources: Tasks that promote understanding and not just memory for text. Journal of Educational Psychology, 91, 301-311.

Dr. Jeong is a Professor of Instructional Systems at Florida State University. He teaches online courses in Distance Learning and develops methods and software tools to build stochastic models that explicate how characteristics of the message and messenger affect dialog move sequences in ways that improve group performance in computer-supported collaborative environments.