VOL. 24, No. 1, 143 - 166

The E-Learning Maturity Model (eMM) is a quality improvement framework designed to help institutional leaders assess their institution's e-learning maturity. This paper reviews the eMM, drawing on examples of assessments conducted in New Zealand, Australia, the UK and the USA to show how it helps institutional leaders assess and compare their institution's capability to sustainably develop, deploy and support e-learning.


Investment in e-learning by educational institutions has grown rapidly, driven at least in part by the expectation that increased use of technology will improve the quality and flexibility of learning (Bush 1945; Cunningham et al. 2000; Bates 2001; Cuban 2001; DfES 2003; Oppenheimer 2003) combined with a changing focus on learners rather than teachers and institutions (Oblinger & Maruyama 1996; Buckley 2002; Laurillard 2002). In a recent Sloan survey (Allen & Seaman, 2006) approximately 60% of US college Chief Academic Officers felt that e-learning was “critical to the long-term strategy of their institution.”

This investment has been supported by the widespread adoption of Learning Management Systems (Zemsky & Massy, 2004) as well as the computerization of other key administrative functions (Hawkins & Rudy, 2006, p. 52) and the investment of internal and external funding on e-learning project work (Alexander 1999, Alexander & McKenzie 1998). There has been a growing recognition that a mature and integrated institutional infrastructure is needed to ensure reliable and cost-effective provision of e-learning opportunities to students (Reid, 1999; Holt et al., 2001). Whether significant improvements in the quality of the student experience have been achieved remains, however, unclear (Conole, 2000; Kenny, 2001; Radloff, 2001; Taylor, 2001; GAO 2003; Zemsky & Massy, 2004).

Institutional leadership must consider the implications for e-learning of resource utilization (Karelis 1999), sustainability (Strauss 2002; Young 2002), scalability and reusability (Bain 1999; IEEE 2002; Boyle 2003) and management (Laurillard 1997; Reid 1999). There is a need for leadership, guidance and vision in implementing e-learning that delivers real educational value to students while also being sustainable for the institution in the long term (Strauss, 2002).

Failures such as that of the UK e-University (Garrett, 2004) and the US Open University (Meyer, 2006) illustrate the challenges that face organizations depending on technology for the delivery of programmes. While the large scale educational benefits of e-learning remain difficult to demonstrate, the use of IT systems for business and administrative activities has become mainstream, and even strategically irrelevant as differentiators between organizations (Carr, 2003).

The need to ensure that the organisational aspects of e-learning are supported as well as the pedagogical and technological is now being recognised (Ryan et al. 2000; Bates 2001) and this includes an understanding of the wider, systems context within which e-learning is situated (Laurillard 1997, 1999; Ison 1999) and the need for leadership, strategic direction and collaboration within an organization (Hanna 1998; Reid, 1999). Woodill and Pasian's (2006) review of the management of e-learning projects demonstrates the limited extent to which formal project management tools and systems are currently evident.

In essence, institutions need to develop organisational maturity if they are to benefit from their investment in e-learning. Organizational maturity captures the extent to which activities supporting the core business are explicit, understood, monitored and improved in a systemic way by the organization. Organizational maturity in the context of e-learning projects requires a combination of capabilities. As well as a clear understanding of the pedagogical aspects, project teams must be able to design and develop resources and tools, provide a reliable and robust infrastructure to deploy those resources and tools, support staff and students using them, and finally place their efforts within a strategically driven environment of continuous improvement. While individual staff may be enthusiastic and skilled, the ability of an institution to support and develop this wider set of capabilities is key to the ongoing sustainability of their work. In the context of the eMM, institutions that have greater organizational maturity in e-learning are described as having greater capability (see below).

Laurillard (1997) has noted that the challenge in stimulating the effective use of e-learning resources and approaches beyond early adopters is to identify the limitations of current practices and consequently how strength in e-learning capability can be incrementally improved. Systematic and incremental improvement must encompass academic, administrative and technological aspects (Jones, 2003) combined with careful integration into the strategies and culture of the institution (Remeyni et al., 1997). As noted by Fullan:

The answer to large-scale reform is not to try to emulate the characteristics of the minority who are getting somewhere under present conditions … Rather, we must change existing conditions so that it is normal and possible for a majority of people to move forward (Fullan 2001, p. 268)

The rapidly evolving nature of the technologies used for e-learning is an additional challenge. Technologies that are useful today are likely to be supplanted or significantly modified in a very short timeframe and new technologies are constantly being introduced in ways that redefine the opportunities available. This constant flux requires flexibility and an openness to change if institutions are to be responsive to the potential opportunities (Hamel & Välikangas, 2003). Institutions need an environment where the processes used to design, deploy and sustain e-learning are robust and effective, rather than ad-hoc and dependent on the energies and skills of particular individuals.

This challenge is not unique to e-learning and has similarly been encountered in the wider field of software engineering. Gibbs (1994) wrote of those creating software in the late eighties and early nineties: “They have no formal process, no measurements of what they do and no way of knowing when they are on the wrong track or off the track altogether.” This criticism could easily be made of the e-learning activities undertaken in many educational institutions today.

One of the ways that the problem of improving the quality of software development was addressed was through the use of process benchmarking. Rather than focusing on particular technologies and measures, process benchmarking examines the quality and effectiveness of the systems and processes used to select, develop, deploy, support, maintain and replace technologies (Humphrey 1994).

In the field of software engineering, the Capability Maturity Model (CMM, Paulk et al. 1993) was developed to provide a framework for process benchmarking. The CMM proposed that organizations engaged in software development moved through five levels of “Maturity” in their process capabilities (Paulk et al. 1993). The CMM has been very successful in stimulating improvements in software development (SEI, 2008) and transfer of good practice between projects (Herbsleb et al. 1994; Lawlis et al. 1995). This success has seen a general adoption of the term 'maturity' to describe organisational effectiveness and a proliferation of maturity models in other domains (Copeland, 2003).

An educational version of the CMM, or e-learning Maturity Model (eMM), potentially has a number of benefits that were identified at its inception (Marshall and Mitchell, 2002) and which are evident to others working in the field (Griffith et al., 1997; Kirkpatrick, 2003; Underwood and Dillon, 2005):

Perhaps most importantly, like the CMM, an eMM can form the basis for an ongoing discussion within the e-learning community with a view to identifying the key processes and practices necessary for achieving sustainable and robust improvements in the quality of e-learning experienced by students.

International application of the eMM since its inception has seen many of these benefits realised and acknowledged publicly. Sector-wide benefits are evident in the projects conducted in the UK (JISC, 2009; Sero, 2007) and New Zealand (Marshall, 2006a; Neal & Marshall, 2008) and in the use of eMM information to frame consideration of specific aspects of e-learning within sectors (Moore, 2005; Choat, 2006), including professional development of staff (Mansvelt et al., 2009; Capelli & Smithies, 2008). Individual institutions' analyses of their own capability (Petrova & Sinclair, 2005; University of London, 2008) are also matched by discipline-specific activities (Lutteroth et al. 2007).

The following pages contain an abbreviated description of the eMM; further information can be found in Marshall (2006b) and on the eMM website: http://www.utdc.vuw.ac.nz/research/emm/

Capability

The most important concept embedded in the eMM is that of Capability, as this is what the model measures and is designed to analyse and improve. Capability in the eMM builds on the more general concept of organizational maturity and incorporates the ability of an institution to ensure that e-learning design, development and deployment are meeting the needs of the students, staff and institution. Critically, capability includes the ability of an institution to sustain e-learning delivery and the support of learning and teaching as demand grows and staff change. Capability is not an assessment of the skills or performance of individual staff or students, but rather a synergistic measure of the coherence and strength of the environment provided by the organization they work within.

A more capable organization, under the eMM, has coherent systems that address each of the key e-learning processes (see following). It monitors whether these processes are delivering the desired outcomes (in measures it defines for itself), helps staff and students learn and engage with the process activities and deliverables, and systematically improves processes to achieve pre-defined improvements.

A less capable institution engages in e-learning in an ad-hoc manner, with disconnected initiatives depending on the skills of individual staff, duplication due to a lack of knowledge of the work of others, and improvement by chance or personal pride. Successful initiatives are lost as staff change and managers lack information on the outcomes experienced by students and staff.

Capability is not just a function of whether the key processes are addressed. It is a summary of activities assessed over five dimensions that capture the organisational lifecycle associated with each key process.

Dimensions of Capability

Technology adoption models commonly present a hierarchical perspective of technology use by organizations. Models such as those proposed by Taylor (2001) and Monson (2005), and the original CMM, are designed and used on the presumption that technology use grows in complexity and effectiveness in an essentially linear, or progressive, manner. The current version of the eMM, in contrast, has adopted the concept of dimensions to describe capability in each of the processes (Figure 1). Based on the original CMM levels, the five dimensions (Delivery, Planning, Definition, Management and Optimization) describe capability in a holistic and synergistic way.

Figure 1: eMM Process Dimensions

The Delivery dimension is concerned with the creation and provision of process outcomes. Assessments of this dimension are aimed at determining the extent to which the process is seen to operate within the institution.

The Planning dimension assesses the use of predefined objectives and plans in conducting the work of the process. The use of predefined plans potentially makes processes more able to be managed effectively and reproduced if successful.

The Definition dimension covers the use of institutionally defined and documented standards, guidelines, templates and policies during the process implementation. An institution operating effectively within this dimension has clearly defined how a given process should be performed. This does not necessarily mean that the staff of the institution understand and follow this guidance.

The Management dimension is concerned with how the institution manages the process implementation and ensures the quality of the outcomes. Capability within this dimension reflects the measurement and control of process outcomes.

The Optimization dimension captures the extent to which an institution is using formal and systematic approaches to improve the activities of the process to achieve pre-defined objectives. Capability of this dimension reflects a culture of continuous improvement.

The dimensional approach avoids the model imposing a particular mechanism for building capability, a criticism that has been made of the original CMM (Bach, 1994). It also helps ensure that the objective of improving capability is not replaced with the artificial goal of achieving a higher maturity level. Organizations which have achieved capability in all of the dimensions of the eMM are, by definition, able to use the high level of awareness of their activities that the Delivery, Planning, Definition and Management dimensions provide to drive the efficient and flexible change processes measured by the Optimization dimension. Indeed, a less capable organization may find itself focusing on documentation and process over outcomes as a consequence of failing to address the concerns of the Optimization dimension of individual processes.

Processes

Recognition of the potential offered by an eMM led to the development of an initial version (Marshall & Mitchell, 2003; 2004) building on the SPICE (Software Process Improvement and Capability Determination) framework (SPICE 1995). The process areas of the first version of the eMM process set were populated using the Seven Principles of Chickering and Gamson (1987) and the Quality on the Line benchmarks (IHEP, 2000) as outlined in Marshall & Mitchell (2004). These heuristics were selected as being widely accepted descriptions of necessary activities for successful e-learning initiatives. It would be better to use empirically well-supported benchmark items with a substantial evidence base proving their utility; however, the weaknesses of the current e-learning evidence base (Conole et al., 2004; Mitchel, 2000; Zemsky & Massy, 2004) mean that heuristics must instead be used. A goal of this initial model was to start evaluating the utility of these initial processes so that they could be refined and expanded upon.

The current version of the eMM (Marshall, 2006b) divides the capability of institutions to sustain and deliver e-learning into thirty-five processes grouped into five major categories or process areas (Table 1) that indicate a shared concern. It should be noted, however, that all of the processes are interrelated to some degree, particularly through shared practices and the perspectives of the five dimensions. Each process in the eMM is broken down within each dimension into practices that define how the process outcomes might be achieved by institutions (Figure 2). The practice statements attempt to capture directly measurable activities for each process and dimension. The practices are derived from an extensive review of the literature, international workshops and experience from their application (Marshall 2008).


Figure 2: Relationships between processes, practices and dimensions
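The relationship between processes and dimensions described above can be sketched as a small data model. This is an illustrative sketch only, not part of the eMM or its tooling: the names `Rating`, `ProcessAssessment`, `rate` and `unrated` are hypothetical, the numeric values attached to the rating labels are an assumption, and the example ratings are invented rather than taken from any assessment. The four labels follow the scale used in the assessment figures later in this paper.

```python
from dataclasses import dataclass, field
from enum import IntEnum

# The five eMM dimensions, in the order given in the text.
DIMENSIONS = ("Delivery", "Planning", "Definition", "Management", "Optimization")


class Rating(IntEnum):
    """Four-point scale from the assessment figures (numeric values are assumed)."""
    NO_CAPABILITY = 0
    PARTIALLY_ADEQUATE = 1
    LARGELY_ADEQUATE = 2
    FULLY_ADEQUATE = 3


@dataclass
class ProcessAssessment:
    """Hypothetical record holding one rating per dimension for a single process."""
    code: str        # process code from Table 1, e.g. "D7"
    statement: str   # process statement from Table 1
    ratings: dict = field(default_factory=dict)  # dimension name -> Rating

    def rate(self, dimension, rating):
        """Record a rating, rejecting anything that is not one of the five dimensions."""
        if dimension not in DIMENSIONS:
            raise ValueError(f"unknown dimension: {dimension}")
        self.ratings[dimension] = Rating(rating)

    def unrated(self):
        """Return the dimensions not yet assessed, in eMM order."""
        return [d for d in DIMENSIONS if d not in self.ratings]


# Invented example values, for illustration only.
d7 = ProcessAssessment("D7", "E-learning resources are designed and managed to maximise reuse.")
d7.rate("Delivery", Rating.PARTIALLY_ADEQUATE)
d7.rate("Planning", Rating.NO_CAPABILITY)
print(d7.unrated())
```

Keeping the dimensions as a fixed, ordered tuple reflects the point that every process is assessed against all five dimensions, rather than climbing a single ladder of levels.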

Table 1. eMM Version 2.3 Processes (revised from Marshall 2006b)

Learning: Processes that directly impact on pedagogical aspects of e-learning

L1. Learning objectives guide the design and implementation of courses.

L2. Students are provided with mechanisms for interaction with teaching staff and other students.

L3. Students are provided with e-learning skill development.

L4. Students are provided with expected staff response times to student communications.

L5. Students receive feedback on their performance within courses.

L6. Students are provided with support in developing research and information literacy skills.

L7. Learning designs and activities actively engage students.

L8. Assessment is designed to progressively build student competence.

L9. Student work is subject to specified timetables and deadlines.

L10. Courses are designed to support diverse learning styles and learner capabilities.

Development: Processes surrounding the creation and maintenance of e-learning resources

D1. Teaching staff are provided with design and development support when engaging in e-learning.

D2. Course development, design and delivery are guided by e-learning procedures and standards.

D3. An explicit plan links e-learning technology, pedagogy and content used in courses.

D4. Courses are designed to support disabled students.

D5. All elements of the physical e-learning infrastructure are reliable, robust and sufficient.

D6. All elements of the physical e-learning infrastructure are integrated using defined standards.

D7. E-learning resources are designed and managed to maximise reuse.

Support: Processes surrounding the support and operational management of e-learning

S1. Students are provided with technical assistance when engaging in e-learning.

S2. Students are provided with library facilities when engaging in e-learning.

S3. Student enquiries, questions and complaints are collected and managed formally.

S4. Students are provided with personal and learning support services when engaging in e-learning.

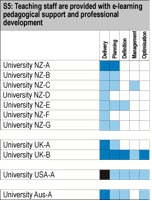

S5. Teaching staff are provided with e-learning pedagogical support and professional development.

S6. Teaching staff are provided with technical support in using digital information created by students.

Evaluation: Processes surrounding the evaluation and quality control of e-learning through its entire lifecycle

E1. Students are able to provide regular feedback on the quality and effectiveness of their e-learning experience.

E2. Teaching staff are able to provide regular feedback on quality and effectiveness of their e-learning experience.

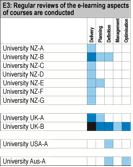

E3. Regular reviews of the e-learning aspects of courses are conducted.

Organization: Processes associated with institutional planning and management

O1. Formal criteria guide the allocation of resources for e-learning design, development and delivery.

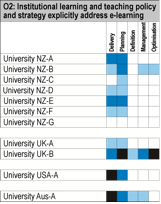

O2. Institutional learning and teaching policy and strategy explicitly address e-learning.

O3. E-learning technology decisions are guided by an explicit plan.

O4. Digital information use is guided by an institutional information integrity plan.

O5. E-learning initiatives are guided by explicit development plans.

O6. Students are provided with information on e-learning technologies prior to starting courses.

O7. Students are provided with information on e-learning pedagogies prior to starting courses.

O8. Students are provided with administration information prior to starting courses.

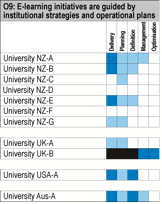

O9. E-learning initiatives are guided by institutional strategies and operational plans.

The eMM has been applied in New Zealand across both the university (Marshall, 2006a) and vocational sectors (Neal and Marshall, 2008), the United Kingdom in both the university (Bacsich, 2006; 2008) and vocational sectors (Sero, 2007) and is currently being applied in universities in Australia, the United States (Marshall et al. 2008) and Japan. In total, nearly 80 different institutions have received assessments of their e-learning capability using the eMM.

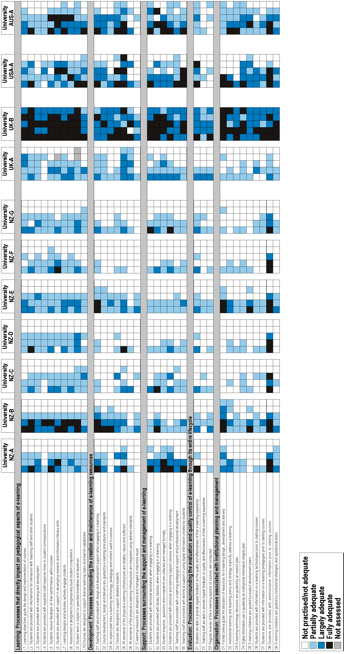

Figure 3 below presents a small sample of recent university assessments undertaken in New Zealand, Australia, the UK and the USA in order to illustrate how the assessments are visualised and how this information can be used to assess institutional strengths and weaknesses. Each main column in this figure contains the results for a single university, with black squares indicating Fully Adequate capability, dark blue Largely Adequate capability, light blue Partially Adequate capability, and white no capability. The sub-columns correspond to the five dimensions of the eMM ordered from left to right (as shown in Figure 1). Visually, this 'carpet' of boxes provides a means of reviewing the capabilities of the institutions and identifying patterns of capability within or across the assessed institutions. The small size of the image helps the analysis by encouraging the eye to see the whole pattern, rather than focussing on specific processes. Some institutions are clearly more capable (darker) than others (lighter), consistent with the different priorities individual institutions have placed on e-learning. No institution, however, is entirely black or entirely white; all have areas where they could make significant improvements in their capability.

Looking at the column for a single institution visualised in Figure 3, such as that for University NZ-B, shows that while some groups of processes are relatively strong (such as the block of Learning processes at the top), others (such as the Support and Evaluation processes) are not. This information can start to guide managers and leaders towards areas that may require prioritisation, with the benefit of being visually clear to most audiences when explaining the rationale for focusing on those issues.

Figure 3: eMM assessments of international universities

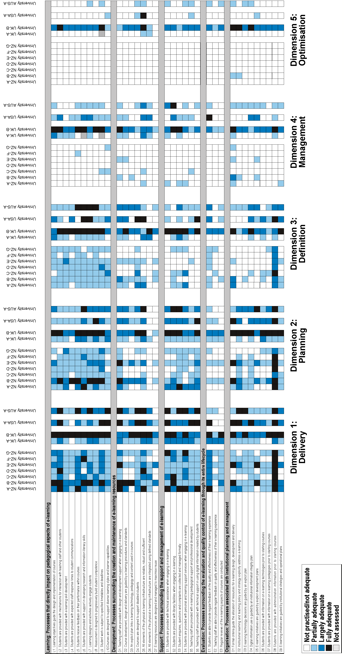
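The 'carpet' layout described above, with one row per process and one cell per dimension, can be approximated in plain text. The following is a minimal sketch under stated assumptions: the function name `render_carpet`, the 0 to 3 numeric encoding and the shading characters are all hypothetical choices standing in for the colours in the figure, and the sample ratings are invented.

```python
# Characters standing in for the figure's colours (an assumption of this sketch):
# 0 = white (no capability), 1 = light blue (Partially Adequate),
# 2 = dark blue (Largely Adequate), 3 = black (Fully Adequate).
SHADES = {0: " ", 1: ".", 2: "+", 3: "#"}


def render_carpet(assessment):
    """Render one institution's column of the carpet as text.

    assessment maps a process code to a list of five ratings (0-3), ordered
    Delivery, Planning, Definition, Management, Optimization.
    """
    lines = []
    for code, ratings in assessment.items():
        if len(ratings) != 5:
            raise ValueError(f"{code}: expected five ratings, one per dimension")
        # Left-align the process code in a four-character column, then one cell per dimension.
        lines.append(f"{code:<4}" + "".join(SHADES[r] for r in ratings))
    return "\n".join(lines)


# Invented ratings for illustration; not taken from any institution in Figure 3.
sample = {
    "L1": [3, 2, 1, 0, 0],
    "D7": [1, 0, 0, 0, 0],
    "E2": [0, 0, 0, 0, 0],
}
print(render_carpet(sample))
```

Even in this crude form, rows that fade from dense to empty characters from left to right make a gradient across the dimensions easy to spot.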

Comparing the institutional assessments in Figure 3 reveals a gradient of capability from left to right within each set of results, suggesting that capability is strongest in the Delivery dimension and weakest in the Optimization dimension. This can be seen more clearly in Figure 4, which sorts the assessments by dimension and groups each institution's assessment for each dimension together. This shows that while capability in the Delivery dimension is generally strong, that in the Management and Optimization dimensions is very much less so. This reflects the observation that many institutions are struggling to monitor and measure their own performance in e-learning (Management dimension) and that a culture of systematic and strategically led continuous improvement of e-learning is also lacking (Optimization dimension).

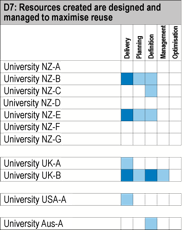
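Regrouping the assessments by dimension, as described above, amounts to collecting every rating for a given dimension across all institutions and processes. The sketch below shows one way this might be summarised numerically. It is not the analysis used in this paper: the function name `mean_by_dimension` is hypothetical, the ratings are invented, and treating the ordinal ratings as the numbers 0 to 3 and averaging them is a simplifying assumption.

```python
from statistics import mean

# The five eMM dimensions, in the order given in the text.
DIMENSIONS = ("Delivery", "Planning", "Definition", "Management", "Optimization")


def mean_by_dimension(assessments):
    """Average rating per dimension across all institutions and processes.

    assessments maps institution -> process code -> list of five ratings (0-3),
    ordered as in DIMENSIONS. Returns None for a dimension with no ratings.
    """
    collected = {d: [] for d in DIMENSIONS}
    for processes in assessments.values():
        for ratings in processes.values():
            # Pair each rating with its dimension and file it under that dimension.
            for dimension, rating in zip(DIMENSIONS, ratings):
                collected[dimension].append(rating)
    return {d: (mean(v) if v else None) for d, v in collected.items()}


# Invented ratings for two hypothetical institutions.
sample = {
    "Institution A": {"L1": [3, 2, 1, 0, 0], "D7": [1, 0, 0, 0, 0]},
    "Institution B": {"L1": [3, 3, 2, 1, 0], "D7": [1, 1, 0, 0, 0]},
}
for dimension, value in mean_by_dimension(sample).items():
    print(f"{dimension:<13}{value}")
```

A median or a count of ratings at each level would arguably respect the ordinal scale better; the mean is used here only because it makes a left-to-right gradient easy to read.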

The assessment information can also be displayed on an individual process basis to highlight issues that may be common across all institutions. The results for these institutions for Process D7 (E-learning resources are designed and managed to maximise reuse) are shown in Figure 5. This clearly shows that even in institutions that are otherwise very capable (such as university UK-B) this process is not one with strong capability. This is consistent with the observation that despite the obvious attraction of reuse on cost and efficiency grounds, large-scale adoption of reuse approaches such as Reusable Learning Objects has not occurred (Barker et al. 2004). This suggests that the conception of reuse in the literature has not been persuasive and that in reality a number of significant barriers to the creation, storage and reuse of educational materials remain.

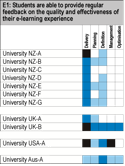

Looking at Figure 3, it is also apparent that a band of weak capability runs across most institutions in the Evaluation processes. These are shown individually in Figure 6. Here, it is apparent that most capability is limited to the Delivery dimension of process E1 (Students are able to provide regular feedback on the quality and effectiveness of their e-learning experience). The results for processes E1 and E3 reflect the use of pre-existing student feedback mechanisms that have not been changed to reflect the use of technology by institutions. Commonly, institutions are assuming that students will complain if some aspect of their e-learning experience is not adequate, rather than actively ensuring that students are using the systems to best advantage. This is consistent with the overall weak capability noted above in the Management dimension.

Figure 4: eMM assessments of international universities arranged by dimension

Figure 5: Process D7 capabilities for eleven international universities

Figure 6: Evaluation process capabilities for eleven international universities

By comparison with E1 and E3, process E2 (Teaching staff are able to provide regular feedback on quality and effectiveness of their e-learning experience) is weaker still (Figure 6). Very little evidence is seen in most institutions of formal and systematic attempts to ensure that staff are able to work effectively in e-learning contexts. This lack of engagement with the need to develop staff skills is also readily apparent in the results for process S5 (Teaching staff are provided with e-learning pedagogical support and professional development) shown in Figure 7. These assessments reflect the common use of optional workshops and webpages without formal assessments of staff skills or any requirement that staff be trained in the use of e-learning.

Figure 7: Process S5 capabilities for eleven international universities

Finally, the weak capability in the Organization processes, especially processes O2 (Institutional learning and teaching policy and strategy explicitly address e-learning) and O9 (E-learning initiatives are guided by institutional strategies and operational plans) shown in Figure 8, and in the Management and Optimization dimensions across the entire result set (Figure 4), is a concern. It suggests that the leadership of most of the institutions assessed has yet to define clear directions for e-learning. This is consistent with the general absence of evidence that e-learning has radically changed organisational activities (Weedon et al. 2004). Keegan et al. (2006) have also noted that significant innovations are commonly linked to external funding and the work of individuals, and that long-term organizational impact and sustainability are questionable.

Figure 8: Process O2 and O9 capabilities for eleven international universities

As outlined above, the eMM combines key features of benchmarking and capability maturity models with those of observational e-learning quality frameworks in order to create a quality framework for improving organisational e-learning maturity. A variety of observational frameworks exist to explore the quality of e-learning and it is useful to contrast these with the eMM to explain the benefits of the eMM's conception of e-learning maturity.

At the level of the individual innovation there are the well-established models of Rogers (2003) and Moore (1999), which provide explanations of the adoption of innovation by individuals and provide mechanisms for encouraging adoption in the general sense. These are popular as a means of describing why so few innovations are adopted, but more work is needed to turn this type of model into a tool for enhancing e-learning technology use by organizations (Moser, 2007).

Technology based observational models like those proposed by Taylor (2001) and Monson (2005) describe the increasing complexity of technology use as new technologies build upon the old. While any e-learning model must acknowledge technology, building dependencies on specific technologies is risky as it implies that deployment of technology drives success; a recipe for expensive failures. There is also the issue that technology is changing at an ever greater pace (Kurzweil, 2005) making the maintenance of the currency of such models an ongoing challenge.

Organisationally focussed observation models like CITSCAPE and MIT90 (Weedon et al. 2004, de Freitas, 2006) and many “maturity” models (Neuhauser, 2004) describe the increasing sophistication of organisational engagement with technology supported change and innovation, but in merely describing what is happening they fail to provide a mechanism for supporting and enhancing that change.

In contrast, quality assurance frameworks impose a particular, normally detailed, compliance model upon organisational activities (Chao et al., 2006). Often, they provide a strong description of necessary activities in a particular context, including e-learning, but these models need constant revision to remain relevant. Compliance models also have the problem that the measurement outcomes can become more important than the improvement of capability, and compliance is almost always a backwards, historical view of an organization, not something that empowers change and growth.

The eMM, in contrast, provides a mechanism for supporting and motivating change. The benchmarking aspects of the model provide a clear picture of an organization's current capabilities and describe the practices that are needed to improve capability. By providing a clear picture of an institution's strengths and weaknesses, combined with a pathway for improving capability, the eMM provides a mechanism for organizations to determine their own priorities, with sufficient flexibility to select technologies and pedagogies that are appropriate to their learners, staff and stakeholder expectations.

In adopting a process driven description of maturity and capability, where capability is defined by specific practices, it is essential that the warning expressed by Hagner (2001, p. 31) be heeded:

“…the author had envisioned the presentation of a wide range of 'best practices' that would resemble a menu-like opportunity for interested institutions to choose from. This original intent was misguided. … 'cherry-picking' a variety of practices is not recommended. Instead of focusing on 'best practices', a more profitable emphasis should be placed on 'best systems.'”

Taylor (2001) observed that the challenge facing universities engaging in e-learning is not so much about innovation as it is about execution and the need to rapidly evolve to sustain change at the pace technology is changing. A similar observation has been made by Hamel and Välikangas (2003) with their concept of strategic resilience and the need for organizations to constantly reinvent their business models and systems before circumstances force change. Institutions need to be ready to reinvent themselves and make purposeful and directed changes in response to new technologies and pedagogies in order to become more 'mature', and the eMM is intended to help understand and guide that process, evolving itself as our understanding grows.

Finally, one of the outcomes of the eMM assessments undertaken to date has been the illustration of the reality that all institutions have particular strengths that can serve as a strong foundation for change and which can help others struggling to develop their own capability.

E-learning, rather than a threat or special form of learning, is potentially an opportunity for growth, building on the identified strengths of the institution and learning from other institutions, sectors and countries, addressing the weaknesses identified by the eMM capability assessment and developing into a mature e-learning institution.

Alexander, S. (1999). An evaluation of innovative projects involving communication and information technology in higher education. Higher Education Research & Development, 18(2), 173-183.

Alexander, S. & McKenzie, J. (1998). An evaluation of Information Technology projects for university learning. CAUT, Canberra: Australian Government Publishing Service.

Allen, I.A., & Seaman, J. (2006). Making the grade: Online education in the United States, 2006. Needham, MA: Sloan Consortium. Retrieved April 19, 2007, from: http://www.sloan-c.org/publications/survey/pdf/making_the_grade.pdf

Bach, J. (1994). The immaturity of CMM. American Programmer, 7(9), 13-18.

Bacsich, P. (2006). Higher Education Academy benchmarking pilot final public report on Pick&Mix and eMM pilots. Retrieved February 25, 2009, from: http://elearning.heacademy.ac.uk/weblogs/benchmarking/wp-content/uploads/2006/09/bacsich-report-public20060901.doc

Bacsich, P. (2008). Benchmarking phase 2 overview report. Retrieved February 25, 2009, from: http://elearning.heacademy.ac.uk/weblogs/benchmarking/wp-content/uploads/2008/04/BenchmarkingPhase2_BELAreport.pdf

Bain, J. (1999). Introduction to the special edition. Learner-centred evaluation of innovations in higher education. Higher Education Research and Development, 18(1), 57-75.

Barker, E., James, H., Knight, G., Milligan, C., Polfreman, M., & Rist, R. (2004). Long-term retention and reuse of e-learning objects and materials. Report Commissioned by the Joint Information Systems Committee (JISC). Retrieved 25 February, 2009, from: http://www.jisc.ac.uk/uploaded_documents/LTR_study_v1-4.doc

Bates, T. (2001). The continuing evolution of ICT capacity: The implications for education. In G. M. Farrel (Ed.), The changing face of virtual education. (pp. 29-46). Vancouver: The Commonwealth of Learning.

Boyle, T. (2003). Design Principles for Authoring Dynamic, Reusable Learning Objects. Australian Journal of Educational Technology, 19(1), 46-58.

Buckley, D.P. (2002, January/February). In pursuit of the learning paradigm: Coupling faculty transformation and institutional change. EduCause Review, 29-38.

Bush, V. (1945, July). As we may think. Atlantic Monthly, 101-108. Retrieved 25 February, 2009, from: http://www.theatlantic.com/doc/194507/bush

Cappelli, T., & Smithies, A. (2008). Learning Needs Analysis through synthesis of Maturity Models and Competency Frameworks. In K. McFerrin et al. (Eds.), Proceedings of Society for Information Technology and Teacher Education International Conference 2008 (pp. 2448-2455). Chesapeake, VA: AACE. Retrieved 10 May, 2009, from: http://www.editlib.org/d/27579/proceeding_27579.pdf

Carr, N. (2003). IT doesn’t matter. Harvard Business Review, 81(5), 41-49.

Chao, T., Saj, T., & Tessier, F. (2006). Establishing a quality review for online courses. Educause Quarterly, 29(3). Retrieved 25 February, 2009, from: http://www.educause.edu/apps/eq/eqm06/eqm0635.asp

Chickering, A. W., & Gamson, Z. F. (1987). Seven principles for good practice in undergraduate education. AAHE Bulletin, 39(7), 3-7.

Choat, D. (2006). Strategic review of the tertiary education workforce: Changing work roles and career pathways. Wellington, NZ: Tertiary Education Commission.

Conole, G., Oliver, M., & Harvey, J. (2000). Toolkits as an Approach to Evaluating and Using Learning Material. 17th Annual ASCILITE Conference. Coffs Harbour: Southern Cross University.

Conole, G., Oliver, M., Isroff, K., & Ravenscroft, A. (2004). Addressing methodological issues in elearning research. Networked Learning Conference 2004. Lancaster University. Retrieved 25 February, 2009, from: http://www.shef.ac.uk/nlc2004/Proceedings/Symposia/Symposium4/Conole_et_al.htm

Copeland, L. (2003). The Maturity Maturity Model (M3). Retrieved 25 February, 2009, from: http://www.stickyminds.com/sitewide.asp?Function=edetail&ObjectType=COL&ObjectId=6653

Cuban, L. (2001). Oversold and underused: Computers in the classroom. Cambridge: Harvard University Press.

Cunningham, S., Ryan, Y., Stedman, L., Tapsall, S., Bagdon, K., Flew, T. & Coaldrake, P. (2000). The business of borderless education. Canberra: DETYA.

DfES (2003). Towards a unified e-learning strategy. Department for Education and Skills, UK. Retrieved 25 February, 2009, from: http://www.dcsf.gov.uk/consultations/downloadableDocs/towards%20a%20unified%20e-learning%20strategy.pdf

El Emam, K., Drouin, J.-N., & Melo, W. (1998). SPICE: The theory and practice of software process improvement and capability determination. California: IEEE Computer Society.

de Freitas, S. (2006). Think piece for Becta on the ‘e-mature learner.’ Retrieved 25 February, 2009, from: http://tre.ngfl.gov.uk/uploads/materials/24877/Think_piece_for_BectaSdF.doc

Fullan, M. (2001). The new meaning of educational change. New York: Teachers College Press.

GAO (2003). Military transformation: Progress and challenges for DOD’s Advanced Distributed Learning programs. United States General Accounting Office report GAO-03-393.

Garrett, R. (2004). The real story behind the failure of the U.K. eUniversity. Educause Quarterly, 4, 4-6.

Gibbs, W. (1994, September). Software's chronic crisis. Scientific American, 86-95.

Griffith, S.R., Day, S.B., Scott, J.E., & Smallwood, R.A. (1997). Progress made on a plan to integrate planning, budgeting, assessment and quality principles to achieve institutional improvement. Paper presented at the Annual Forum of the Association for Institutional Research (36th, Albuquerque, NM, May 5-8, 1996). AIR Professional File Number 66, The Association for Institutional Research for Management Research, Policy Analysis and Planning.

Hagner, P.R. (2001). Interesting practices and best systems in faculty engagement and support. Paper presented at the National Learning Infrastructure Initiative Focus Session, Seattle, USA.

Hamel, G., & Valikangas, L. (2003, September). The quest for resilience. Harvard Business Review, 52-63.

Hanna, D. E. (1998). Higher education in an era of digital competition: Emerging organizational models. Journal of Asynchronous Learning Networks, 2(1), 66-95.

Hawkins, B. L. & Rudy, J.A. (2006). Educause core data service: Fiscal year 2006 summary report. Educause.

Herbsleb, J., Carleton, A., Rozum, J., Siegel, J., & Zubrow, D. (1994). Benefits of CMM-based software process improvement: Initial results. Pittsburgh: Software Engineering Institute, Carnegie Mellon.

Holt, D., Rice, M., Smissen, I., & Bowly, J. (2001). Towards institution-wide online teaching and learning systems: Trends, drivers and issues. Proceedings of the 18th ASCILITE Conference (pp. 271-280). Melbourne: University of Melbourne.

Humphrey, W. S. (1994). The personal software process. Software Process Newsletter, 13(1), 1-3.

IEEE (2002). 1484.12.1 IEEE Standard for Learning Object Metadata. Retrieved 25 February, 2009, from: http://ltsc.ieee.org/wg12/index.html

IHEP (2000). Quality on the line: Benchmarks for success in internet-based distance education. The Institute for Higher Education Policy. Retrieved 25 February, 2009, from: http://www.ihep.org/Publications/publications-detail.cfm?id=69

Ison, R. (1999). Applying systems thinking to higher education. Systems Research and Behavioural Science, 16, 107-112.

JISC (2009). The e-Learning Benchmarking and Pathfinder Programme 2005-08: An overview. Bristol, UK: Joint Information Systems Committee.

Jones, S. (2003). Measuring the quality of higher education: Linking teaching quality measures at the delivery level to administrative measures at the university level. Quality in Higher Education, 9(3), 223-229.

Karelis, C. (1999). Education Technology and Cost Control: Four models. Syllabus Magazine, 12(6), 20-28.

Keegan, D., Paulsen, M. F., & Rekkedal, T. (2006). Megatrends in e-learning provision: Literature review. Paper prepared for the EU Leonardo MegaTrends Project. Retrieved 25 February, 2009, from: http://www.nettskolen.com/in_english/megatrends/Literature_review.pdf

Kenny, J. (2001). Where academia meets management: A model for the effective development of quality learning materials using new technologies. Proceedings of the 18th ASCILITE Conference (pp. 327-334). Melbourne: University of Melbourne.

Kirkpatrick, D. (2003). Supporting E-learning in Open and Distance Education. Proceedings of the 17th annual AAOU conference, Bangkok, Thailand. Retrieved 6 January, 2006, from: http://www.stou.ac.th/AAOU2003/Full%20Paper/Denise.pdf

Kurzweil, R. (2005). The singularity is near. New York: Penguin.

Laurillard, D. (1997). Applying systems thinking to higher education. Milton Keynes: Open University.

Laurillard, D. (1999). A conversational framework for individual learning applied to the learning organisation and the learning society. Systems Research and Behavioural Science, 16, 113-122.

Laurillard, D. (2002). Rethinking university teaching: A conversational framework for the effective use of learning technologies (2nd ed.). London: Routledge Falmer.

Lawlis, P. K., Flowe, R. M., & Thordahl, J. B. (1995). A correlational study of the CMM and software development performance. Crosstalk: The Journal of Defense Software Engineering, 8(9), 21-25.

Lutteroth, C., Luxton-Reilly, A., Dobbie, G., & Hamer, J. (2007). A Maturity Model for Computing Education. In Proceedings of the Ninth Australasian Computing Education Conference (ACE2007), Ballarat, Australia. CRPIT, 66. ACS. 107-114.

Mansvelt, J., Suddaby, G., O’Hara, D., & Gilbert, A. (2009). Professional Development: Assuring Quality in E-learning Policy and Practice. Quality Assurance in Education, 17(3). Retrieved May 10, 2009, from: http://www.emeraldinsight.com/Insight/viewContentItem.do?contentType=Article&hdAction=lnkpdf&contentId=1785025&history=false

Marshall, S. (2005). Determination of New Zealand tertiary institution e-learning capability: An application of an e-learning maturity model. Report to the New Zealand Ministry of Education. 132pp. Retrieved January 10, 2006, from: http://www.utdc.vuw.ac.nz/research/emm/documents/SectorReport.pdf

Marshall, S. (2006a). New Zealand tertiary institution e-learning capability: Informing and guiding e-learning architectural change and development. Report to the New Zealand Ministry of Education. 118pp. Retrieved September 19, 2006, from: http://www.utdc.vuw.ac.nz/research/emm/

Marshall, S. (2006b). eMM version two process guide. Wellington: Victoria University of Wellington. Retrieved October 9, 2009, from: http://www.utdc.vuw.ac.nz/research/emm/documents/versiontwothree/20070620ProcessDescriptions.pdf

Marshall, S. J. (2008). What are the key factors that lead to effective adoption and support of e-learning by institutions? HERDSA 2008 Conference. Rotorua: HERDSA.

Marshall, S., & Mitchell, G. (2002). An e-learning maturity model? 19th ASCILITE Conference. Auckland: Unitec. Retrieved January 10, 2006, from: http://www.unitec.ac.nz/ascilite/proceedings/papers/173.pdf

Marshall, S., & Mitchell, G. (2003). Potential indicators of e-learning process capability. Educause in Australasia 2003. Adelaide: Educause. Retrieved January 10, 2006, from: http://www.utdc.vuw.ac.nz/research/emm/documents/1009anav.pdf

Marshall, S., & Mitchell, G. (2004). Applying SPICE to e-learning: An e-learning maturity model? Sixth Australasian Computing Education Conference. Retrieved January 10, 2006, from: http://portal.acm.org/ft_gateway.cfm?id=979993&type=pdf&coll=GUIDE&dl=GUIDE&CFID=62903527&CFTOKEN=3085292

Marshall, S., & Mitchell, G. (2005). E-learning process maturity in the New Zealand tertiary sector. Educause in Australasia 2005. Auckland: Educause. Retrieved January 10, 2006, from: http://www.utdc.vuw.ac.nz/research/emm/documents/E-LearningProcessMaturity.pdf

Marshall, S., & Mitchell, G. (2006). Assessing sector e-learning capability with an e-learning maturity model. Association for Learning Technologies Conference, 2006. Edinburgh: ALT.

Marshall, S.J., & Mitchell, G. (2007). Benchmarking international e-learning capability with the e-learning maturity model. Educause in Australasia 2007. Melbourne: Educause. Retrieved January 8, 2008, from: http://www.caudit.edu.au/educauseaustralasia07/authors_papers/Marshall-103.pdf

Marshall, S., Udas, K., & May, J. (2008). Assessing online learning process maturity: The e-learning maturity model. 14th Annual Sloan-C International Conference on Online Learning. Orlando: Sloan.

Meyer, K.A. (2006). The closing of the U.S. open university. Educause Quarterly, 29(2). Retrieved February 25, 2009, from: http://www.educause.edu/apps/eq/eqm06/eqm0620.asp

Mitchel, P. (2000). The impact of educational technology: A radical reappraisal of research methods. In D. Squires, G. Conole, & G. Jacobs (Eds.), The changing face of learning technology. Cardiff: University of Wales Press.

Monson, D. (2005). Blackboard Inc., An Educause platinum partner—the educational technology framework: charting a path toward a networked learning environment. Educause Western Regional Conference, April 26-28, 2005, San Francisco, CA, USA. San Francisco: Educause. Retrieved 25 February 2009, from: http://www.educause.edu/WRC05/Program/4954?PRODUCT_CODE=WRC05/CORP05

Moore, G. A. (1999). Crossing the chasm: Marketing and selling high-tech products to mainstream customers. New York: HarperBusiness.

Moore, P. (2005). Information literacy in the New Zealand education sector. Paper presented at Asia and the Pacific Seminar-Workshop on Educational Technology, Lifelong learning and information literacy, Asia and the Pacific Programme of Educational Innovation for Development (APEID), Tokyo Gakugei University, 5-9 September, 2005. Tokyo, Japan. Retrieved 17 July 2009, from: http://gauge.u-gakugei.ac.jp/apeid/apeid05/CountryPapers/NewZealand.pdf

Moser, F.Z. (2007). Faculty Adoption of Educational Technology. Educause Quarterly, 30(1). Retrieved 25 February 2009, from: http://www.educause.edu/apps/eq/eqm07/eqm07111.asp

Neal, T., & Marshall, S. (2008). Report on the distance and flexible education capability assessment of the New Zealand ITP sector. Confidential Report to the New Zealand Tertiary Education Committee and Institutes of Technology and Polytechnics Distance and Flexible Education Steering Group. 82pp.

Neuhauser, C. (2004). A maturity model: Does it provide a path for online course design? The Journal of Interactive Online Learning, 3(1). Retrieved 25 February 2009, from: http://www.ncolr.org/jiol/issues/PDF/3.1.3.pdf

Oblinger, D. G., & Maruyama, M. K. (1996). Distributed Learning. CAUSE Professional Paper Series, No. 14. Retrieved 27 June, 2006, from: http://eric.ed.gov/ERICDocs/data/ericdocs2/content_storage_01/0000000b/80/26/ca/d3.pdf

Oppenheimer, T. (2003). The flickering mind. New York: Random House.

Paulk, M. C., Curtis, B., Chrissis, M. B., & Weber, C. V. (1993). Capability Maturity Model, Version 1.1. IEEE Software, 10(4), 18-27.

Petrova, K., & Sinclair, R. (2005). Business undergraduates learning online: A one semester snapshot. International Journal of Education and Development using ICT, 1(4). Retrieved 17 July, 2009, from: http://ijedict.dec.uwi.edu/include/getdoc.php?id=864&article=100&mode=pdf

Radloff, A. (2001). Getting Online: The challenges for academic staff and institutional leaders. Proceedings of the 18th ASCILITE Conference, 11-13.

Reid, I. C. (1999). Beyond models: Developing a university strategy for online instruction. Journal of Asynchronous Learning Networks, 3(1), 19-31.

Remenyi, D., Sherwood-Smith, M., & White, T. (1997). Achieving maximum value from information systems: A process approach. New York: John Wiley and Sons.

Rogers, E. M. (2003). Diffusion of innovations (5th ed.). New York: Free Press.

Ryan, Y., Scott, B., Freeman, H., & Patel, D. (2000). The virtual university: The internet and resource-based learning. London: Kogan Page.

SEI (2008). Process maturity profile: CMMI® SCAMPI℠ class A appraisal results 2007 year-end update. Pittsburgh: Carnegie Mellon Software Engineering Institute. Retrieved February 25, 2009, from: http://www.sei.cmu.edu/appraisal-program/profile/pdf/CMMI/2008MarCMMI.pdf

Sero (2007). Baseline study of e-activity in Scotland’s colleges. Retrieved February 25, 2008, from: http://www.sfc.ac.uk/information/information_learning/presentations_publications/sero_e_activity_study.pdf

SPICE (2005). Software process assessment version 1.00. Retrieved December 18, 2002, from: http://www-sqi.cit.gu.edu/spice/

Strauss, H. (2002, May/June). The right train at the right station. Educause Review, 30-36.

Taylor, J. (2001). Fifth generation distance education. Keynote Address presented at the 20th ICDE World Conference, Düsseldorf, Germany, 1-5 April 2001.

Underwood, J., & Dillon, G. (2005). Capturing complexity through maturity modelling. In B. Somekh and C. Lewin (Eds.), Research methods in the social sciences. London: Sage, 260-264.

University of London (2008). Benchmarking eLearning at the University of London: Report from the steering group. London: University of London. Retrieved 17 July 2008, from: http://www.londonexternal.ac.uk/quality/comte_zone/sys_tech_sub/stsc2/documents/stsc2_3.pdf

Weedon, E., Jorna, K., & Broumley, L. (2004). Closing the gaps in institutional development of networked learning: How do policy and strategy inform practice to sustain innovation? Proceedings of the Networked Learning Conference 2004, 1-7 April, 2004 at Lancaster University, UK. Retrieved 25 February 2008, from: http://www.networkedlearningconference.org.uk/past/nlc2004/proceedings/symposia/symposium8/weedon_et_al.htm

Woodill, G., & Pasian, B. (2006). eLearning project management: A review of the literature. In B. Pasian and G. Woodill (Eds.), Plan to learn: Case studies in elearning project management. Dartmouth, Nova Scotia, Canada: Canadian eLearning Enterprise Alliance, 4-10. Retrieved July 17, 2009, from: http://www.celea-aceel.ca/DocumentHandler.ashx?DocId=1945

Young, B. A. (2002). The paperless campus. Educause Quarterly, 25(2), 4-5.

Zemsky, R., & Massy, W. F. (2004). Thwarted innovation: What happened to e-learning and why. The Learning Alliance at the University of Pennsylvania. Retrieved February 25, 2009, from: http://www.irhe.upenn.edu/Docs/Jun2004/ThwartedInnovation.pdf

Stephen Marshall (http://www.utdc.vuw.ac.nz/about/staff/stephen.shtml) is Acting Director and Senior Lecturer in Educational Technology at the VUW University Teaching Development Centre. E-Mail: stephen.marshall@vuw.ac.nz