VOL. 32, No. 1 2016

The development of critical thinking is a rationale for higher education and an important aspect of online educational discussions. A key component in most accounts of critical thinking is to evaluate the tenability of claims. The community of inquiry framework is among the most influential frameworks for research on online educational discussions. In this framework, cognitive presence accounts for critical thinking as progress through the following phases of inquiry: triggering event, exploration, integration, and solution.


This article discusses the cognitive presence construct as a tool for measuring critical thinking. It traces the philosophical inspirations of the Community of Inquiry framework and discusses the construct validity of the cognitive presence construct. Empirical findings enabled by the framework are briefly reviewed and discussed. The author argues that, since the cognitive presence construct addresses discussants' evaluation of the tenability of a claim only to a limited degree, the construct has weaknesses for assessing critical thinking in discussions. In making this claim, the article contributes to methodological and theoretical discussions about research on critical thinking in online educational discussions.


This work is licensed under a Creative Commons Attribution 3.0 Unported License.

Online discussions have become a widespread learning activity. The development of critical thinking is a rationale for higher education and a feature frequently examined in research about online discussions. A common method for assessing the quality of online discussions is analysis of discussion transcripts. Nevertheless, critical thinking is not easily defined or operationalized.

Researchers have suggested a large number of different approaches to operationalize critical thinking in online educational discussions. Weltzer-Ward (2011) identified 52 different research frameworks and coding schemes employed between 2002 and 2009 in research on such discussions, although not all of these focused on the critical thinking aspect. According to Weltzer-Ward, a lack of consistent tools hinders comparison of research results and the ability to build on previous analysis. She recommended that researchers concentrate on a smaller number of frameworks, particularly those that have been most frequently applied in the research field. Among the most frequently applied are frameworks and schemes focusing on inquiry phases (Weltzer-Ward, 2011). De Wever, Schellens, Valcke, and Van Keer (2006) discussed 15 different frameworks for content analysis of transcripts from online discussions. According to their research, coherence between the theoretical bases and operationalizations is questionable for a number of the frameworks they examined.

The Community of Inquiry (CoI) framework (Garrison, Anderson, & Archer, 1999; Garrison, Anderson, & Archer, 2001; Garrison, 2011) is widely claimed to be a leading research approach to e-learning in general and online educational discussions in particular (Gašević, Adesope, Joksimović, & Kovanović, 2015; Jézégou, 2010; Shea, 2010; Swan, Garrison, & Richardson, 2009; Weltzer-Ward, 2011). A significant number of studies based on this framework have recently been conducted (Gašević et al., 2015; Horzum & Uyanik, 2015; Lee, 2014; Shea et al., 2014). The framework is also applied through automatic coding software based on learning analytics (Kovanović, Gašević, Joksimović, Hatala, & Adesope, 2015). The CoI framework aims to describe how e-learning can support a collaborative approach to education that promotes deep and meaningful learning (Garrison et al., 1999; Garrison, Anderson, & Archer, 2010). According to the framework, a challenge for online education is to overcome distance and support several kinds of presence. The model suggests the following three distinct but overlapping constructs to assess online educational discussions: social presence, teaching presence, and cognitive presence.

Figure 1: The Community of Inquiry model. Taken from Wikimedia Commons, originally published in Garrison et al. (1999)

Social presence signifies participants’ ability to present themselves as “real people” in a purely textual medium and is characterized by emotional expression, open communication, and group cohesion. Teaching presence describes the design and facilitation of the educational experience. Cognitive presence aims to describe higher-order knowledge acquisition and critical thinking as progress through the phases of a triggering event, exploration, integration, and resolution.

The abundance of different frameworks and the variety of different approaches to critical thinking indicate that operationalizing critical thinking is not straightforward. Although the research literature is rich with different approaches, literature that discusses the adequacy and validity of the different frameworks is scarce. For example, there is some discussion about reliability issues (Garrison, Cleveland-Innes, Koole, & Kappelman, 2006), yet little critical discussion of the construct validity of the cognitive presence construct within CoI research. Two articles represent exceptions to this. Rourke and Anderson (2004) noted that the observed low levels of cognitive presence might result from shortcomings in the coding protocol (p. 11). This observation has not generated much attention. Ho and Swan (2007) suggested an alternative approach to measuring cognitive presence. Their motivation was that established approaches to measuring cognitive presence had been “less successful” or “yielded disappointing results” (p. 4). Inspired by Grice’s (1989) cooperative principle, Ho and Swan (2007) suggested focusing on the communicative quality of discussants’ messages rather than on progress toward a solution. Even though their coding scheme has generated interesting empirical findings, the research field has not pursued this approach to measuring cognitive presence. Other researchers focus on how arguments are constructed. Such frameworks (Cho & Jonassen, 2002; Weinberger & Fischer, 2006), inspired by Toulmin’s (1958/2003) work on informal logic, are commonly used in empirical research (Noroozi, Weinberger, Biemans, Mulder, & Chizari, 2013).

The aim of this article is to discuss the validity of cognitive presence as an operationalization of critical thinking. The research question that guides the discussion is as follows: How adequately is critical thinking in online educational discussions assessed by the cognitive presence construct from the CoI framework?

This examination of the cognitive presence construct contains the following components. First, it traces the philosophical origins of the construct and examines what ideas the framework pursues. Second, the coding scheme is compared with what I will describe as a “minimum conception” of critical thinking. The concept of construct validity guides the comparison. Third, empirical findings based on the framework are briefly reviewed by asking what kind of empirical findings the framework enables, and whether the empirical findings shed light on the measurement tool’s capability to assess critical thinking. By discussing these questions, the article contributes to methodological and theoretical debates in the research field. The article does not consider other aspects of the CoI framework, such as the operationalizations of social presence and teaching presence or the idea of distinguishing between different kinds of presences.

Before entering into the discussion of cognitive presence, I will briefly sketch the concepts of construct validity and critical thinking. Construct validity concerns whether an operationalization or coding scheme assesses what it is intended to assess. The development of critical thinking is a key rationale for higher education; however, consensus about how the concept should be interpreted has not been established. In the following, I will discuss some different approaches to critical thinking and sketch what I call a “minimum conception of critical thinking.” This minimum conception will be used as a tentative standard against which cognitive presence can be compared.

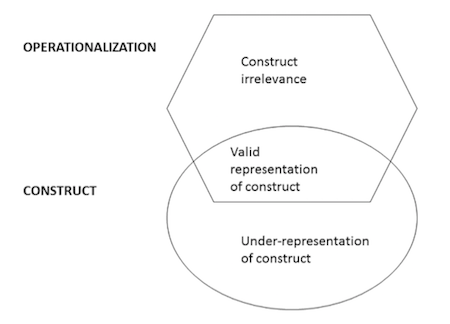

Validity in empirical research concerns the quality of the inferences that can be drawn from data (Messick, 1995). This includes considerations of representativeness and possibilities for generalization. When researching abstract and not directly observable phenomena, such as critical thinking, cognitive presence, and deep and meaningful learning, researchers need to operationalize these into observable indicators. Construct validity concerns whether the operationalizations into coding schemes and research tools assess what they are intended to assess. A coding scheme that validly operationalizes a construct will overlap it. The overlapping area represents the features of the construct that the coding scheme validly operationalizes as observable indicators (Kleven, 2008; Messick, 1995).

Figure 2: Construct validity

A coding scheme may specify indicators of a construct that are more or less contingent and sufficient to identify a construct. Drawing on Messick (1995) and Kleven (2008), two dimensions, construct relevance and construct representativeness, constitute the indicators’ construct validity.

Construct relevance has to do with whether an operationalization identifies indicators that really belong to the construct. Construct-irrelevant indicators are features that do not represent the construct, although they may be spuriously related to it. If an operationalization of critical thinking identifies indicators that do not necessarily represent critical thinking (e.g., the use of Latin terms), the indicators lack construct relevance. In such cases, utterances that do not demonstrate critical thinking may wrongly be interpreted as showing critical thinking.

Construct representativeness describes whether the operationalization is able to identify necessary, sufficient, or, at least, central features of the construct. When an operationalization fails to identify important features of critical thinking (like precise and unambiguous expressions), the operationalization is considered to be construct under-representative.
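The two dimensions can be made concrete with a small sketch. It treats a construct and a coding scheme as sets of features and expresses relevance and representativeness as simple overlap ratios. The ratios, the function name `construct_overlap`, and the feature labels are illustrative assumptions made here; they are not measures proposed by Messick (1995) or Kleven (2008).

```python
def construct_overlap(construct_features, scheme_indicators):
    """Return (relevance, representativeness) as illustrative overlap ratios.

    relevance: share of the scheme's indicators that belong to the construct.
    representativeness: share of the construct's features the scheme captures.
    Both ratios are a simplification for illustration only.
    """
    construct = set(construct_features)
    indicators = set(scheme_indicators)
    shared = construct & indicators
    relevance = len(shared) / len(indicators) if indicators else 0.0
    representativeness = len(shared) / len(construct) if construct else 0.0
    return relevance, representativeness


# Hypothetical feature labels, chosen only to mirror the examples in the text.
critical_thinking = {"evaluating evidence", "checking inferences", "precise expression"}
coding_scheme = {"evaluating evidence", "use of Latin terms"}

relevance, representativeness = construct_overlap(critical_thinking, coding_scheme)
print(f"relevance={relevance:.2f}, representativeness={representativeness:.2f}")
```

In this toy case, one of the two indicators is construct irrelevant (the use of Latin terms), and two of the three construct features are not captured, so the scheme is also construct under-representative.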

A construct-valid operationalization will make it possible to distinguish among and rank empirical instances according to the chosen construct. For research on critical thinking in online educational discussions, discussions (and eventually discussion posts) can thus be ranked according to the levels of critical thinking they contain.

Another aspect of the quality of inferences drawn from data concerns the reliability of coding. Reliability of coding describes how consistently different coders interpret and code the same empirical material (inter-rater reliability) and how consistently the same rater codes over time (intra-rater reliability). A number of studies have discussed issues concerning reliability in coding of discussion transcripts (De Wever et al., 2006; Garrison et al., 2006), pointing to the need for appropriate statistical measures. Fewer publications have addressed questions of construct validity, which is the focus of this article.
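As an illustration of what such a statistical measure can look like, the sketch below computes Cohen's kappa, a common chance-corrected agreement statistic for two coders. The choice of kappa is an assumption made here for illustration; the text does not name a specific statistic. The example codes are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders on the same messages."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must code the same non-empty set of messages.")
    n = len(coder_a)
    # Observed agreement: share of messages given the same code by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected agreement by chance, from each coder's marginal code frequencies.
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # Both coders used a single identical code throughout.
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned by two coders to six messages.
coder_1 = ["exploration", "exploration", "integration",
           "triggering event", "exploration", "resolution"]
coder_2 = ["exploration", "integration", "integration",
           "triggering event", "exploration", "exploration"]
print(cohens_kappa(coder_1, coder_2))
```

Here the coders agree on four of six messages, but a third of that agreement would be expected by chance, so kappa comes out at about 0.5. A high kappa speaks only to reliability; it says nothing about whether the codes capture critical thinking.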

The development of critical thinking is an important rationale for higher education and plays a central role, both as a goal for and as a prerequisite of successful online discussions. A number of adjacent concepts, such as argumentation, reflection, analytical thinking, and rationality, are similar ideals for education. Within the learning sciences, concepts like deep learning (Biggs & Tang, 2007; Marton & Säljö, 1976a, 1976b) and higher-order cognitive processes (Anderson & Krathwohl, 2001) are related educational ideals. According to Siegel (1988/2013) and Lipman (1991/2003), the idea of critical thinking concerns what good thinking is and is closely related to reasonableness and rationality.

The value and importance of critical thinking and related concepts as educational rationales are supported by several reasons. First, rationality and critical investigation are at the core of science, academic study, and any kind of intellectual activity. Second, rationality is of vital importance in a world where what counts as valid knowledge is rapidly changing. Bluntly formulated, if knowledge keeps no better than fish, a way of checking the durability and validity of knowledge claims is urgently needed. Thus, critical thinking is vital in a knowledge- and innovation-driven economy (for further development of this point, see, for instance, Poce, Corcione, & Iovine, 2012, p. 50). Third, citizens who are critical thinkers and take part in an ongoing rational conversation on societal matters are vital for a democratic society. Dewey (1916/2007) and Habermas (1987) are paradigmatic proponents of this view. Fourth, the ability to think critically and independently is important in order to act as an autonomous subject. According to Siegel (2012) and Bailin and Siegel (2002), to be accepted as an independent center of consciousness, that is, a person, implies being able to perform criteria-governed thinking by oneself about who to be and how best to live. Fifth, providing, analyzing, and justifying claims imply cognitive elaboration and activation of prior knowledge and conceptual schemes, thereby stimulating students’ learning (Stegmann, Wecker, Weinberger, & Fischer, 2011).

Despite the widespread acknowledgement of the importance of critical thinking, there seems to be no established consensus regarding how the concept should be understood, much less operationalized. Davies and Barnett (2015), in their introduction to a comprehensive handbook on critical thinking in higher education, put it this way: “After more than four decades of dedicated scholarly work, critical thinking remains more elusive than ever” (p. 3).

A number of different conceptions of critical thinking can be found. By analyzing interviews with academics, Moore (2011a) identified several approaches to how critical thinking is understood. These include judgment (evaluating whether claims are sufficiently evidenced); rationality (evaluating the validity of inferences drawn from premises and judging whether a conclusion is sufficiently supported by premises); careful and sensitive readings of texts (both recognizing how a text’s assumptions are supported, and recognizing the text on its own premises and within its own context); a skeptical and provisional view of knowledge (which can be described as fallibilism); self-reflexivity (the ability to reflect on one’s own biases); and original thinking (seeing new connections, creativity). Finally, an ethical and activist stance toward contemporary problems is regarded as an additional feature of critical thinking. These themes are addressed in Davies and Barnett’s (2015) discussion on whether critical thinking should be regarded as a formal capacity for evaluating arguments; a political and activist orientation focused on identifying missing or concealed dimensions of meaning; or as a concern “with the development of the student as a person” (p. 7) becoming “educated in a modern world” and acting as a “good citizen” (p. 9).

Even though the approaches vary, many accounts of critical thinking point to one core feature as essential: The ability to judge the validity and tenability of a claim, that is, to determine whether a claim is to be considered true. Dewey proposes the following paradigmatic definition of reflective thinking: “active, persistent and careful consideration of any belief or supposed form of knowledge in the light of the grounds that support it and the further conclusions toward which it tends” (Dewey, 1909/1997). This definition was echoed and broadened in a consensus report from the American Philosophical Association: “Critical thinking [is] purposeful, self-regulatory judgment which results in interpretation, analysis, evaluation, and inference, as well as explanation of the evidential, conceptual, methodological, criteriological, or contextual considerations upon which that judgment is based” (Facione, 1990). In line with these definitions, Robert Ennis, a leading scholar in the critical thinking movement, has formulated a brief definition of critical thinking as “reasonable, reflective thinking focused on deciding what to believe or do” (Ennis, 1991/2015). Ennis builds explicitly on Dewey’s work. Ennis’ first formulation of what he labeled “a streamlined conception for critical thinking” dates back to 1991. It has been influential in the field and might inform the search for an operational definition when researching online discussions.

The ability to evaluate whether a claim is justified and thus valid will be considered as a minimum conception of critical thinking throughout this article. Evaluating the validity of a claim may include several steps or procedures, including the following:

The list above is my synthesis of arguments from philosophers oriented toward informal logic, such as Dewey (1909/1997), Næss (1953/2005), and Toulmin (1958/2003). The list also echoes philosophical virtues dating back to the ancient classics, including Plato and Aristotle.

For the purpose of discussing cognitive presence as a tool to measure critical thinking, further elaboration will be considered excessive. The minimum conception proposes that a core feature of critical thinking is to identify and evaluate whether claims are supported by sufficient and relevant backing. This is in line with Dewey’s conception of reflective thinking and certainly makes it relevant in the discussion of the CoI framework, which claims to be consistent with Dewey’s account of inquiry and thinking.

Even if one accepts this minimum conception of critical thinking, further vagueness and controversies remain. These include questions about whether critical thinking comprises only skills and abilities or whether attitudes and dispositions toward using such abilities should also be included (Facione, 1990); whether critical thinking can be understood as a generic capacity or whether it is best accounted for as sets of domain-specific capacities (Moore, 2011b); and whether critical thinking should be understood as a formal and epistemic discipline or whether true critical thinking also includes a societal, activist stance (Davies & Barnett, 2015). One source of the fuzziness about how the concept of critical thinking should be understood is that it is investigated from both empirical/descriptive and philosophical/normative angles, and these perspectives have informed each other only to a minor degree (Hyytinen, 2015).

Central to the conceptualization of critical thinking in the cognitive presence construct is the practical inquiry model (Garrison, 2011; Garrison et al., 2001; Swan, Garrison, & Richardson, 2009). The model aims toward integrating the following aspects of thinking: perception and reflection, social and private modes of thinking, induction (arrival at generalizations) and deduction (employment of generalizations), intuition and creativity, and action and deliberation. The practical inquiry model accounts for these aspects as progress through inquiry cycles of the phases included below in Table 1.

Table 1. Cognitive Presence as Phases of Inquiry Process. Based on Garrison (2011) and Shea (2010).

| Phase | Indicators |
| --- | --- |
| Triggering Event | Recognizing the problem |
| Exploration | Information exchange |
| Integration | Connecting ideas |
| Resolution | Applying solutions |

These phases form the basis for a coding scheme for interpreting and categorizing messages in online discussions according to the inquiry phase they represent, and thereby the level of critical thinking. All phases of an inquiry process are required in order to achieve high levels of critical thinking. A discussion that reaches a tested solution represents a more advanced level of critical thinking than a discussion that hovers around identifying and understanding a problem. The idea that “deep and meaningful learning does not occur until students move to integration and resolution stages” (Shea et al., 2010, p. 15) is crucial. This way of coding messages makes it possible to classify which level of critical thinking and cognitive presence a discussion as a whole represents. Since all phases of a discussion are essential, there is no option of assessing the quality of a single message. The coding scheme was initially developed in an article by Garrison et al. (1999). A more elaborated and detailed version is found in Shea (2010, p. 20). Similar coding schemes have been developed for the constructs of social presence and teaching presence. A typical research aim has been to investigate relations between cognitive presence and the other constructs.
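The logic of phase-based coding can be sketched in a few lines. The example below assumes each message has already been assigned one of the four phase labels by a human coder, and summarizes a discussion by the distribution of codes and the most advanced phase observed. The function name `summarize_discussion` and the "most advanced phase" summary rule are simplifications introduced here for illustration; they are not the published coding protocol.

```python
from collections import Counter

# The four phases of the practical inquiry model, in order of progress.
PHASES = ("triggering event", "exploration", "integration", "resolution")


def summarize_discussion(coded_messages):
    """Summarize a discussion from the phase codes of its messages.

    Returns the number of messages per phase and the most advanced phase
    that appears at all (None for an empty discussion).
    """
    counts = Counter(coded_messages)
    unknown = set(counts) - set(PHASES)
    if unknown:
        raise ValueError(f"Unknown phase codes: {sorted(unknown)}")
    distribution = {phase: counts.get(phase, 0) for phase in PHASES}
    reached = [phase for phase in PHASES if distribution[phase] > 0]
    highest = reached[-1] if reached else None
    return distribution, highest


# Hypothetical codes for a four-message discussion.
codes = ["triggering event", "exploration", "exploration", "integration"]
distribution, highest = summarize_discussion(codes)
print(distribution, highest)
```

Note that the summary says nothing about any individual message; it only describes how far the discussion as a whole has progressed, which is the property the coding scheme treats as the level of critical thinking.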

Arbaugh et al. (2008) have developed a survey instrument based on the CoI framework in order to measure students’ conceptions of the different presences in the model and to conduct studies with a multi-institutional scope. The instrument consists of 34 propositions that respondents grade from “strongly disagree” to “strongly agree.” Twelve of the questions address cognitive presence and assess how respondents perceive the phases of an inquiry process in their educational experience.FN1 Several studies have reported that the instrument is valid in the sense that correlation between the different measured presences occurs as expected (Horzum & Uyanik, 2015; Kozan & Richardson, 2014). In this article, I raise a question about the validity of measures of cognitive presence, even though the measured levels seem to covary reasonably with social and teaching presence.

Due to the wide reception of the CoI framework, both critiques and suggestions for further developments have been published. In order to demonstrate that cognitive presence as progress through phases remains a stable and defining idea in the CoI framework, the next paragraphs briefly review some of these critiques and developments. In a critical review, Rourke and Kanuka (2009) focused on the CoI framework’s attempt to promote and research deep and meaningful learning as well as empirical findings showing that integration and solution phases are rarely reached. They argued that the CoI framework has failed as a research strategy by lacking an appropriate and construct-valid tool to measure deep and meaningful learning. Further, they argued that the CoI model fails as a strategy to promote deep and meaningful learning, and they suggested alternative strategies to enhance deep learning based on assessment, reduced content, and confronting misconceptions. Akyol et al. (2009) responded to the critique, partly by dismissing the methodology of the review and partly by insisting that the CoI framework focuses on learning processes, not learning results. Jézégou (2010) criticized the CoI framework for weak and unclear theoretical foundations. Garrison (2013) responded by explicating constructivism as the theoretical background for the CoI framework and announcing metacognition as a supplemental construct in the model. Xin (2012) suggested that many utterances in a discussion contain social, teaching, and cognitive functions at the same time. This challenges the idea of separating such presences. Nevertheless, few critics of the CoI framework have questioned whether cognitive presence and thereby critical thinking is adequately operationalized and assessed as progress through the phases of inquiry.

Recently, supplements to the CoI model have been suggested. As mentioned above, one is the construct of metacognition, which focuses on one’s own and peers’ knowledge, monitoring, and reflection of cognition, and claims that self-corrective strategies are integral to inquiry (Akyol & Garrison, 2011; Garrison & Akyol, 2015). Another recent suggestion is to supplement the model with the construct of learning presence (Shea & Bidjerano, 2010; Shea et al., 2012; Shea et al., 2014). This construct emphasizes the value of self- and co-regulation and monitoring of learning. Although there are clear similarities between these suggested new constructs, there has been some controversy among their proponents. Both groups assert that the goal of the educational process is cognitive presence, understood and measured as progress through the phases of inquiry. Redmond (2014) suggested a similar idea by claiming that reflection (understood as self- and co-reflection and awareness along with regulation of cognition) is definitive for cognitive presence and can be included in the established coding scheme. Despite these critiques and developments, cognitive presence operationalized as progress through phases has remained a stable element of the CoI framework.

The CoI framework has several theoretical foundations. The main inspiration for the CoI framework is Dewey’s thoughts on education and thinking and the relation between them (Garrison et al., 1999; Garrison, 2011; Swan et al., 2009). One of Dewey’s central motives (Dewey, 1909/1997, 1920/1971, 1933/1986) was a rejection of what he called “false dualisms.” Traditional epistemologies tend to set up world and mind, thinking and action, and the individual and the social as contrasts that shape the way we conceptualize the world. Dewey aimed at a naturalistic account of thinking and knowing by putting knowledge and the knowledge-seeking subject back into their surroundings (Rorty, 1986). Thinking should not be understood as an inner process within a solitary subject disengaged from the world, but as toil to overcome and cope with problems in the world. In this view, thinking is closely related to problem solving. An inquiry process is typically initiated when a problem occurs, that is, in a situation where the actor’s established responses to the environment are inadequate. Later phases in the process include elaboration and testing of hypothetical solutions. Dewey took the scientific method, and especially Darwin’s contributions to biology, as ideals for investigation, but he pointed out that there are no essential differences between everyday reasoning and scientific reasoning. What characterizes scientific reasoning is a higher degree of precision and abstraction. For all kinds of reasoning, conclusions rely on testing in the real world rather than on speculative evaluation. Further, the development of reflection and reasoning is an inherent goal for education, which is more crucial than teaching students to memorize facts.

Lipman (Lipman, 1991/2003; Lipman, Sharp, & Oscanyan, 1980) introduced “community of inquiry” as an educational concept, emphasizing that dialogues are important for education. Originally, the concept was introduced by Peirce, a pragmatist philosopher and predecessor of Dewey. According to Peirce, the concept illustrates that scientific inquiry normally is conducted in collaboration with colleagues and peers. Criticizing and defending assumptions and hypotheses in a community of peers is thus the primordial mode of inquiry. Inspired by Dewey, Lipman (1991/2003) developed the concept of community of inquiry into an educational concept. According to Lipman, education should stimulate students’ ability to reflect. Furthermore, Lipman acknowledged that dialogues performed in a community of peers strengthen students’ reasoning and critical thinking abilities. In line with this, Lipman, Sharp, and Oscanyan (1980) have developed a program for developing pupils’ reflective reasoning by dialogical means, which is labeled Philosophy for Children (sometimes abbreviated as P4C). The concept of community of inquiry is commonly associated with the movement for promoting philosophy with children.

Another tradition that has inspired the CoI framework focuses on the concept of deep learning, as opposed to surface learning (Biggs & Tang, 2007; Marton & Säljö, 1976a, 1976b). The concept of deep learning connects cognitive presence with educational rationales, such as higher-order thinking and critical thinking.

Other than Lipman’s influence, references to the general literature on critical thinking in education are scarce in the research literature on the CoI framework. Ideas from researchers like Ennis (1989), Siegel (1988/2013), and Toulmin (1958/2003) might have contributed to a broader conception of critical thinking.

The element from Dewey pursued in the cognitive presence coding scheme is the idea that inquiry proceeds through defined phases. In Dewey’s account, progress in inquiry is used to illustrate how thinking (i.e., problem solving and inquiry) is embedded in practical contexts and is a response to obstacles to actors’ enterprises in their surroundings.FN2 In the coding scheme, this description of how thinking, and thus inquiry, emerges as a response to obstacles in the surroundings is taken as an account of the quality of the process. One might ask if this represents confusion between a description of how thinking is initiated and an evaluation of how advanced or successful the thinking is.

Proponents of the CoI model emphasize that Dewey’s insights show the importance of dialogue and community to thinking, inquiry, and education. However, the cognitive presence construct does not necessarily highlight the social aspect of thinking. One might picture an individual in solitude progressing through the phases of inquiry. Progress through phases of inquiry as an operationalization of critical thinking and interpretation of Dewey’s philosophy does not emphasize the social and dialogical aspect of thinking.

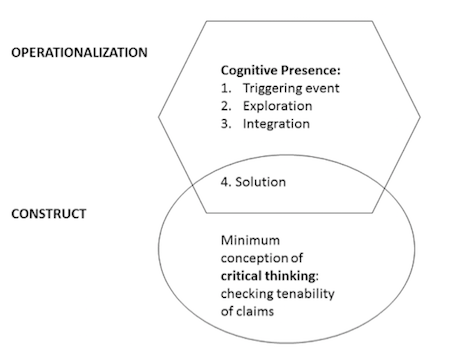

Construct validity concerns whether an operationalization or coding scheme measures what it intends to measure. Cognitive presence in the CoI framework is meant to be closely related to critical thinking. As previously described, a minimum conception of critical thinking highlights checking the tenability of claims, and the cognitive presence construct operationalizes critical thinking as progress through phases of inquiry. How well does progress through phases resemble checking the tenability of claims?

One answer to this question is: not very well. Progress from the triggering event via exploration toward integration may take place without any investigation of how well relevant claims and arguments are supported. The indicators that are associated with these phases, like “sense of puzzlement,” “information exchange,” and “connecting ideas” (see Table 1), do not necessarily imply any checking of the claims’ tenability. Indicators used to describe the solution phase, like “testing solutions” and “defending solutions,” may imply the evaluation of tenability.

Figure 3 illustrates that there is limited necessary overlap between the cognitive presence coding scheme and the minimum conception of critical thinking. Critical thinking may take place without progress through predefined phases, which indicates that the operationalization underrepresents what it aims to measure. Similarly, problem solving through predefined phases of inquiry may take place without any critical thinking, which indicates the irrelevance of the operationalization. It is important to note the term necessary in the description above. Critical thinking may well take place during progress through inquiry phases. However, such an occasional connection is neither necessary nor sufficient to characterize either critical thinking or progress through inquiry phases. This indicates that the coding scheme is both construct irrelevant and construct under-representative, which implies weak construct validity for the cognitive presence coding scheme.

Figure 3: Construct validity of cognitive presence coding scheme as a measure of critical thinking. Concordance between checking tenability of claims and progress through phases of inquiry.

Critical thinking may be defined by characteristics other than the one suggested in my minimum conception, i.e., evaluation of claims’ tenability. Alternative characteristics of critical thinking might be precision, fallibilism, perspective awareness, intellectual integrity, un-tendentiousness, or the ability to draw logically valid inferences. Defining critical thinking by such virtues will lead to similar conclusions regarding the construct validity of progress through phases as a measure of critical thinking. Treating progress toward a solution as an indicator of the level of critical thinking implies that thoroughness and meticulousness are less desired. Focusing on reaching a solution may imply that the framework emphasizes aspects of a discussion that do not necessarily represent critical thinking.

A construct-valid coding scheme for assessing critical thinking should have the capacity to distinguish discussions with high levels of critical thinking from discussions with low levels of critical thinking. It is questionable whether the coding scheme based on cognitive presence has this capacity. Discussions that do not reach a solution but that concern complex problems would be rated as showing lower levels of critical thinking than discussions in which (even wrong) solutions are reached to less demanding questions. Overvaluing solutions to intellectual problems might favor a less thoughtful approach or reward solutions even when they rely on poor understanding, weak evidence, unclear concepts, and so forth.
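The point can be made concrete with a small, purely illustrative sketch. It is not part of the CoI framework or of any published coding tool. The two discussions, the `Post` structure, the `checks_tenability` flag, and both scoring functions are hypothetical assumptions introduced only to show how a phase-based score and a tenability-based score may rank the same discussions in opposite order.

```python
from dataclasses import dataclass

# Phases of inquiry in the order assumed by the cognitive presence construct.
PHASES = ["triggering event", "exploration", "integration", "resolution"]


@dataclass
class Post:
    phase: str               # phase assigned by a hypothetical coder
    checks_tenability: bool  # hypothetical flag: does the post evaluate how well a claim is supported?


def phase_score(posts):
    """Highest phase reached in the discussion, as an index into PHASES."""
    return max(PHASES.index(p.phase) for p in posts)


def tenability_share(posts):
    """Share of posts that check the tenability of a claim."""
    return sum(p.checks_tenability for p in posts) / len(posts)


# Hypothetical discussion of a complex problem: no solution is reached,
# but most posts examine how well claims are supported.
complex_unresolved = [
    Post("triggering event", False),
    Post("exploration", True),
    Post("exploration", True),
    Post("integration", True),
]

# Hypothetical discussion of a simple question: a (possibly wrong) solution
# is reached without any post checking the support for a claim.
simple_resolved = [
    Post("triggering event", False),
    Post("exploration", False),
    Post("integration", False),
    Post("resolution", False),
]

for name, posts in [("complex_unresolved", complex_unresolved),
                    ("simple_resolved", simple_resolved)]:
    print(name, phase_score(posts), tenability_share(posts))
```

Under these assumptions, the resolved discussion receives the higher phase-based score even though none of its posts checks a claim's tenability, while the unresolved discussion receives the lower phase-based score despite the larger share of tenability checks.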

As previously mentioned, a common finding in reviews of CoI research is that online educational discussions rarely reach phases of integration and resolution, but instead tend to hover around the initial phases of the inquiry process (Garrison et al., 2001; Garrison, 2011; Kanuka & Anderson, 1998; Rourke & Kanuka, 2009; Zydney, deNoyelles, & Kyeong-Ju Seo, 2012). In keeping with the idea that deep and meaningful learning takes place when students reach the integration and resolution phases, several explanations might be possible (Garrison et al., 2001; Rourke & Anderson, 2004).

One way of explaining the low incidence of discussion posts in the integration and resolution phases is to presume that online discussions fail as a strategy to promote cognitive presence and thereby critical thinking (Rourke & Kanuka, 2007, 2009). Another way of explaining the measured low levels of cognitive presence is to interpret them as a result of unsatisfactory levels of teaching presence, and thereby a result of weak facilitation. Discussion tasks designed with clear expectations about outcomes, combined with facilitation that guides discussion forward, might drive discussions toward the resolution phase (Garrison, 2011; Swan et al., 2009).

Yet another strategy is to expect higher levels of cognitive presence to occur in media and learning activities other than online discussion:

These more abstract phases of knowledge construction will not be most evident in student interactive discourse (threaded discussions) but should, instead, be evident in activities designed to allow for their demonstration, such as integrative papers, projects, case studies and the like (Shea, 2010, Section 4.4).

According to this view, online discussions do not fulfill their intended purpose; however, low observed levels of cognitive presence are explained within the CoI framework.

The previous discussion of the construct validity of the cognitive presence measure suggests that low observed levels of cognitive presence might also be a result of the tool’s weak ability to measure critical thinking. This implies that it is impossible to know whether the low observed levels of cognitive presence could be explained as weaknesses in online discussions as an arena that fosters critical thinking, weaknesses in facilitation, or as weaknesses in the measurement tool (Rourke & Anderson, 2004).

The above discussion has addressed how the cognitive presence construct measures critical thinking. To sum up, first, the cognitive presence measurement tool takes Dewey’s description of how thinking and inquiry are initiated as responses to obstacles in the surroundings—following certain steps of problem solving—as an account of what critical thinking is. This way of reading Dewey does not distinguish between how thinking, inquiry, and problem solving are initiated and what good critical thinking is. An alternative approach, based on Dewey (1909/1997) and pursued by Ennis (1991/2015), is to consider critical thinking as the evaluation of a claim’s tenability.

Second, the cognitive presence measurement tool does not target discussants’ evaluation of a claim’s tenability as the key feature of critical thinking. Compared to a minimum conception of critical thinking that takes “deciding what to believe” as a hallmark, the coding scheme has weak construct validity, and the operationalized indicators (progress through phases of inquiry) might be considered both construct irrelevant and construct under-representative.

Third, empirical studies based on a cognitive presence measurement tool show that high levels of cognitive presence are rarely found in online educational discussions. Rather than initiating investigations about the validity of the coding schemes, such findings are explained by ad hoc hypotheses, such as explaining low observed levels of cognitive presence as a result of weak facilitation or expecting that students will express higher levels of cognitive presence in other learning activities.

The CoI framework and the cognitive presence coding scheme are frequently applied in empirical research (Gašević, Adesope, Joksimović, & Kovanović, 2015; Weltzer-Ward, 2011). Does the wide acceptance of the framework and of its operationalization of cognitive presence indicate that the argument presented in this article is erroneous? At least two objections regarding the soundness of this article’s claims may be raised. First, is it really critical thinking that the CoI framework aims to measure with the cognitive presence construct? Second, even if the cognitive presence construct does not describe critical thinking (as defined here), might cognitive presence not define and measure other important educational rationales?

In the initial and seminal articles by Garrison et al. (1999, 2001), it is made quite clear that cognitive presence is proposed as an operationalization of critical thinking: “cognitive presence as assessed and defined by the phases [represent] a generalized model of critical thinking” (Garrison et al., 2001, p. 8). Moreover, these articles discussed established characteristics of critical thinking (accuracy, logic, etc.) and rejected these as being too “algorithmic” to be a suitable strategy to operationalize critical thinking (Garrison et al., 2001, p. 12). Instead, the founders of the CoI framework wanted to focus on discussants who “demand reason for beliefs [… in a] self-judging community” (Garrison et al., 2001, p. 12). This focus corresponds to what I have called a minimum conception of critical thinking. The claim in this article is that such a conception of critical thinking is not measured by the cognitive presence indicators in a manner that upholds construct validity. Operationalizations of discussants’ demands for reasons for beliefs, based on informal logic (Toulmin, 1958/2003) as opposed to “algorithmic” approaches to cognition, are proposed in the research field in several frameworks (Clark, Sampson, Weinberger, & Erkens, 2007; Noroozi, Weinberger, Biemans, Mulder, & Chizari, 2012; Weinberger & Fischer, 2006) and may represent an alternative approach to measuring cognitive presence.

What other kinds of educational rationales do the cognitive presence indicators possibly measure, if not critical thinking? Problem solving is commonly associated with critical thinking and shares a number of overarching themes with it (McCormick, Clark, & Raines, 2015). The CoI literature mentions deep and meaningful learning (Biggs & Tang, 2007) as an associated educational rationale. Higher-order knowledge acquisition is also a candidate (Anderson & Krathwohl, 2001). One might also expect that high levels of cognitive presence coincide with student engagement (Kahu, 2013; Kuh, 2009), cognitive engagement, and students spending time on task rather than drifting into distractions. Nevertheless, a number of taxonomies and tools have been developed to measure such educational rationales, and it has not been established that cognitive presence measured as progress through phases of inquiry represents a construct-valid measurement for any of them.

The argument in this article is addressed primarily toward the research community. If the argument is found persuasive, it may spark some debate in parts of the community of inquirers on collaboration and critical thinking in digital educational settings. The argument may be refuted with an adequate explication of the validity of cognitive presence, or it may stimulate further development of the CoI framework.

Other possible implications concern how to guide and facilitate educational discussions. If critical thinking is more profoundly accounted for as checking a claim’s tenability than as progression toward a solution, this might influence instructions and facilitation of discussions.

Akyol, Z., Arbaugh, J. B., Cleveland-Innes, M., Garrison, D. R., Ice, P., Richardson, J. C., & Swan, K. (2009). A response to the review of the Community of Inquiry framework. Journal of Distance Education, 23(2), 123-135.

Akyol, Z., & Garrison, D. R. (2011). Assessing metacognition in an online community of inquiry. The Internet and Higher Education, 14(3), 183-190. doi:http://dx.doi.org/10.1016/j.iheduc.2011.01.005

Anderson, L. W., & Krathwohl, D. R. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom's taxonomy of educational objectives. New York: Longman.

Arbaugh, J. B., Cleveland-Innes, M., Diaz, S. R., Garrison, D. R., Ice, P., Richardson, J. C., & Swan, K. P. (2008). Developing a Community of Inquiry instrument: Testing a measure of the Community of Inquiry framework using a multi-institutional sample. The Internet and Higher Education, 11(3-4), 133-136. doi:http://dx.doi.org/10.1016/j.iheduc.2008.06.003

Bailin, S., & Siegel, H. (2002). Critical thinking. In N. Blake, P. Smyers, R. Smith, & P. Standish (Eds.), The Blackwell guide to the philosophy of education. Blackwell Publishing.

Biggs, J. B., & Tang, C. S.-k. (2007). Teaching for quality learning at university: What the student does. Maidenhead: McGraw-Hill/Society for Research into Higher Education & Open University Press.

Cho, K.-L., & Jonassen, D. H. (2002). The effects of argumentation scaffolds on argumentation and problem solving. Educational Technology Research and Development, 50(3), 5-22. doi:10.1007/bf02505022

Clark, D., Sampson, V., Weinberger, A., & Erkens, G. (2007). Analytic frameworks for assessing dialogic argumentation in online learning environments. Educational Psychology Review, 19(3), 343-374. doi:10.1007/s10648-007-9050-7

Davies, M., & Barnett, R. (2015). Introduction. In M. Davies & R. Barnett (Eds.), The Palgrave handbook of critical thinking in higher education.

De Wever, B., Schellens, T., Valcke, M., & Van Keer, H. (2006). Content analysis schemes to analyze transcripts of online asynchronous discussion groups: A review. Computers & Education, 46(1), 6-28. doi: http://dx.doi.org/10.1016/j.compedu.2005.04.005

Dewey, J. (1909/1997). How we think. Mineola, N.Y.: Dover Publ.

Dewey, J. (1920/1971). Reconstruction in philosophy. Boston: Beacon Press.

Dewey, J. (1933/1986). How we think (rev. ed.) The later works: 1925–1953: Vol. 8: 1933. Carbondale, Ill: Southern Illinois University Press.

Dewey, J. (2007). Democracy and education: An introduction to the philosophy of education. Sioux Falls, SD: NuVision.

Ennis, R. H. (1989). Critical thinking and subject specificity: Clarification and needed research. Educational Researcher, 18(3), 4–10.

Ennis, R. H. (1991/2015). Critical thinking: A streamlined conception. In M. Davies & R. Barnett (Eds.), The Palgrave handbook of critical thinking in higher education (pp. 31-47). New York: Palgrave Macmillan US.

Facione, P. A. (1990). Critical thinking: A statement of expert consensus for purposes of educational assessment and instruction. Research Findings and Recommendations.

Garrison, D. R., Anderson, T., & Archer, W. (1999). Critical inquiry in a text-based environment: Computer conferencing in higher education. The Internet and Higher Education, 2(2-3), 87-105. doi:10.1016/s1096-7516(00)00016-6

Garrison, D. R., Anderson, T., & Archer, W. (2001). Critical thinking, cognitive presence, and computer conferencing in distance education. American Journal of Distance Education, 15(1), 7-23.

Garrison, D. R. (2007). Online community of inquiry review: Social, cognitive, and teaching presence issues. Journal of Asynchronous Learning Networks, 11(1), 61-72.

Garrison, D. R. (2011). E-learning in the 21st century. New York: Routledge.

Garrison, D. R. (2013). Theoretical foundations and epistemological insights of the Community of Inquiry. In Z. Akyol & D. R. Garrison (Eds.), Educational communities of inquiry theoretical framework, research and practice. Hershey: Information Science Reference, IGI Global.

Garrison, D. R., & Akyol, Z. (2015). Toward the development of a metacognition construct for communities of inquiry. The Internet and Higher Education, 24, 66-71. doi:http://dx.doi.org/10.1016/j.iheduc.2014.10.001

Garrison, D. R., Anderson, T., & Archer, W. (2010). The first decade of the Community of Inquiry framework: A retrospective. The Internet and Higher Education, 13(1), 5-9.

Garrison, D. R., Cleveland-Innes, M., Koole, M., & Kappelman, J. (2006). Revisiting methodological issues in transcript analysis: Negotiated coding and reliability. The Internet and Higher Education, 9(1), 1–8. doi:http://dx.doi.org/10.1016/j.iheduc.2005.11.001

Gašević, D., Adesope, O., Joksimović, S., & Kovanović, V. (2015). Externally facilitated regulation scaffolding and role assignment to develop cognitive presence in asynchronous online discussions. The Internet and Higher Education, 24, 53-65. doi: http://dx.doi.org/10.1016/j.iheduc.2014.09.006

Grice, P. (1989). Studies in the way of words. Cambridge, MA: Harvard University Press.

Habermas, J. (1987). The theory of communicative action: 2: Lifeworld and system: A critique of functionalist reason. London: Heinemann.

Ho, C.-H., & Swan, K. (2007). Evaluating online conversation in an asynchronous learning environment: An application of Grice's Cooperative Principle. The Internet and Higher Education, 10(1), 3–14. doi: http://dx.doi.org/10.1016/j.iheduc.2006.11.002

Horzum, M. B., & Uyanik, G. K. (2015). An item response theory analysis of the Community of Inquiry Scale. The International Review of Research in Open and Distributed Learning, 16(2).

Hyytinen, H. (2015). Looking beyond the obvious: Theoretical, empirical and methodological insights into critical thinking. PhD thesis delivered to University of Helsinki.

Jézégou, A. (2010). Community of Inquiry in e-learning: A critical analysis of the Garrison and Anderson model. Journal of Distance Education, 24(3), 18–27.

Kahu, E. R. (2013). Framing student engagement in higher education. Studies in Higher Education, 38(5), 758–773. doi: 10.1080/03075079.2011.598505

Kanuka, H., & Anderson, T. (1998). Online social interchange, discord, and knowledge construction. Journal of Distance Education, 13(1).

Kleven, T. A. (2008). Validity and validation in qualitative and quantitative research. Nordic Studies in Education, 28(03).

Kovanović, V., Gašević, D., Joksimović, S., Hatala, M., & Adesope, O. (2015). Analytics of Communities of Inquiry: Effects of learning technology use on cognitive presence in asynchronous online discussions. The Internet and Higher Education, 27, 74-89. doi: http://dx.doi.org/10.1016/j.iheduc.2015.06.002

Kozan, K., & Richardson, J. C. (2014). New exploratory and confirmatory factor analysis insights into the Community of Inquiry Survey. The Internet and Higher Education, 23, 39-47. doi: http://dx.doi.org/10.1016/j.iheduc.2014.06.002

Kuh, G. D. (2009). What student affairs professionals need to know about student engagement. Journal of College Student Development, 50(6), 683–706. doi: 10.1353/csd.0.0099

Lee, S.-M. (2014). The relationships between higher order thinking skills, cognitive density, and social presence in online learning. The Internet and Higher Education, 21, 41–52. doi: http://dx.doi.org/10.1016/j.iheduc.2013.12.002

Lipman, M. (1991/2003). Thinking in education (2nd ed.). Cambridge: Cambridge University Press.

Lipman, M., Sharp, A. M., & Oscanyan, F. S. (1980). Philosophy in the classroom. Philadelphia: Temple University Press.

Marton, F., & Säljö, R. (1976a). On qualitative differences in learning: I: Outcome and process. British Journal of Educational Psychology, 46(1), 4–11. doi: 10.1111/j.2044-8279.1976.tb02980.x

Marton, F., & Säljö, R. (1976b). On qualitative differences in learning – II: Outcome as a function of the learner's conception of the task. British Journal of Educational Psychology, 46(2), 115-127. doi: 10.1111/j.2044-8279.1976.tb02304.x

McCormick, N. J., Clark, L. M., & Raines, J. M. (2015). Engaging students in critical thinking and problem solving: A brief review of the literature. Journal of Studies in Education, 5(4), 100-113.

Messick, S. (1995). Validity of psychological assessment: Validation of inferences from persons' responses and performances as scientific inquiry into score meaning. American Psychologist, 50(9), 741-749. doi: 10.1037/0003-066X.50.9.741

Moore, T. (2011). Critical thinking: Seven definitions in search of a concept. Studies in Higher Education, 38(4), 506–522. doi: 10.1080/03075079.2011.586995

Moore, T. J. (2011). Critical thinking and disciplinary thinking: A continuing debate. Higher Education Research & Development, 30(3), 261-274. doi: 10.1080/07294360.2010.501328

Noroozi, O., Weinberger, A., Biemans, H. J. A., Mulder, M., & Chizari, M. (2012). Argumentation-based computer supported collaborative learning (ABCSCL): A synthesis of 15 years of research. Educational Research Review, 7(2), 79-106. doi: http://dx.doi.org/10.1016/j.edurev.2011.11.006

Noroozi, O., Weinberger, A., Biemans, H. J. A., Mulder, M., & Chizari, M. (2013). Facilitating argumentative knowledge construction through a transactive discussion script in CSCL. Computers & Education, 61, 59–76. doi: http://dx.doi.org/10.1016/j.compedu.2012.08.013

Næss, A. (1953/2005). The selected works of Arne Naess: Vol. 1: Interpretation and preciseness: A contribution to the theory of communication. Dordrecht: Springer.

Poce, A., Corcione, L., & Iovine, A. (2012). Content analysis and critical thinking. An assessment study. Cadmo.

Redmond, P. (2014). Reflection as an indicator of cognitive presence. E-Learning and Digital Media, 11(1), 46-58.

Rorty, R. (1986). Introduction. In Dewey: The later works: 1925–1953: Vol. 8: 1933. Carbondale, Ill: Southern Illinois University Press.

Rourke, L., & Anderson, T. (2004). Validity in quantitative content analysis. Educational Technology Research and Development, 52(1), 5-18. doi: 10.1007/bf02504769

Rourke, L., & Kanuka, H. (2007). Barriers to online critical discourse. International Journal of Computer-Supported Collaborative Learning, 2(1), 105–126. doi: 10.1007/s11412-007-9007-3

Rourke, L., & Kanuka, H. (2009). Learning in Communities of Inquiry: A review of the literature. Journal of Distance Education, 23(1), 19-48.

Shea, P. (2010). A re-examination of the Community of Inquiry framework: Social network and content analysis. The Internet and Higher Education, 13(1), 10.

Shea, P., & Bidjerano, T. (2010). Learning presence: Towards a theory of self-efficacy, self-regulation, and the development of a Communities of Inquiry in online and blended learning environments. Computers & Education, 55(4), 1721–1731. doi: http://dx.doi.org/10.1016/j.compedu.2010.07.017

Shea, P., Hayes, S., Smith, S. U., Vickers, J., Bidjerano, T., Pickett, A., . . . Jian, S. (2012). Learning presence: Additional research on a new conceptual element within the Community of Inquiry (CoI) framework. The Internet and Higher Education, 15(2), 89–95. doi: http://dx.doi.org/10.1016/j.iheduc.2011.08.002

Shea, P., Hayes, S., Uzuner-Smith, S., Gozza-Cohen, M., Vickers, J., & Bidjerano, T. (2014). Reconceptualizing the Community of Inquiry framework: An exploratory analysis. The Internet and Higher Education, 23, 9-17. doi: http://dx.doi.org/10.1016/j.iheduc.2014.05.002

Shea, P., Hayes, S., Vickers, J., Gozza-Cohen, M., Uzuner, S., Mehta, R., . . . Rangan, P. (2010). A re-examination of the Community of Inquiry framework: Social network and content analysis. The Internet and Higher Education, 13(1–2), 10–21. doi: http://dx.doi.org/10.1016/j.iheduc.2009.11.002

Siegel, H. (1988/2013). Educating reason. Routledge.

Siegel, H. (2012). Education as initiation into the space of reasons. Theory and Research in Education, 10(2), 191–202. doi: 10.1177/1477878512446542

Stegmann, K., Wecker, C., Weinberger, A., & Fischer, F. (2011). Collaborative argumentation and cognitive elaboration in a computer-supported collaborative learning environment. Instructional Science, 40(2), 297–323. doi: 10.1007/s11251-011-9174-5

Swan, K., Garrison, D., & Richardson, J. (2009). A constructivist approach to online learning: The Community of Inquiry framework. Information technology and constructivism in higher education: Progressive learning frameworks. Hershey, PA: IGI Global.

Toulmin, S. (1958/2003). The uses of argument. Cambridge: Cambridge University Press.

Weinberger, A., & Fischer, F. (2006). A framework to analyze argumentative knowledge construction in computer-supported collaborative learning. Computers & Education, 46(1), 71–95. doi: http://dx.doi.org/10.1016/j.compedu.2005.04.003

Weltzer-Ward, L. (2011). Content analysis coding schemes for online asynchronous discussion. Campus-Wide Information Systems, 28(1), 56-74.

Xin, C. (2012). A critique of the Community of Inquiry framework. International Journal of E-Learning & Distance Education, 26(1).

Jens Breivik, Center for Teaching, Learning and Technology, UiT - The Arctic University of Norway. E-mail: jens.breivik@gmail.com