VOL. 32, No. 2 2016

Designers have a limited selection of K-12 online course creation standards to choose from that are not locked behind proprietary or pay walls. For numerous institutions and states, the iNACOL National Standards for Quality Online Courses are becoming a widely used resource. This article presents the final phase of a three-part study testing the validity and reliability of the iNACOL standards as they apply specifically to online course design. Phase three was a field test of the revised rubric, based on the iNACOL standards, against current K-12 online courses. While the results show a strong exact match percentage, more work remains to be done on the revised rubric.


This work is licensed under a Creative Commons Attribution 3.0 Unported License.

The use of online courses in the K-12 environment continues to grow, with supplemental online course enrollments at roughly 4.5 million in the United States alone (Gemin, Pape, Vashaw, & Watson, 2015). This influx of online courses into the United States education system has drawn attention to the differences between traditional and virtual environments, among them the design of asynchronous course content. Somewhat surprisingly, however, research into this critical aspect of K-12 online learning has been both minimal (Barbour, 2013; Barbour & Adelstein, 2013a) and narrow in scope, focusing mainly on specific schools (Barbour, Morrison, & Adelstein, 2014; Friend & Johnston, 2005; Zucker & Kozma, 2003).

Foundations and associations such as the Michigan Virtual Learning Research Institute (MVLRI) have taken up the task of further research into course design. For example, since 2013 the MVLRI's yearly recommendations to the Michigan Legislature have addressed educational delivery models and instructional design standards (MVLRI, 2016). To date, the recommendations made by the MVLRI have focused primarily on the International Association for K-12 Online Learning's (iNACOL) (2011) National Standards for Quality Online Courses. At present, the iNACOL online course design standards are among the most popular non-proprietary, publicly available standards, both in the United States and internationally. Yet the iNACOL standards were not developed using a traditional process that examines the validity and reliability of the standards and of any instruments (i.e., rubrics) designed to measure them (Barbour, 2013; Barbour & Adelstein, 2013b; Molnar, Rice, Huerta, Shafer, Barbour, Miron, Gulosino, & Horvitz, 2014).

This article outlines the third and final phase of a research study designed to begin examining the iNACOL online course design standards for validity and reliability. The first phase of this study provided a cursory review of the iNACOL standards to determine the level of support for each standard element within the K-12 online learning literature, as well as the broader online learning literature (see Adelstein & Barbour, 2016). During the second phase, two panels comprising eight experts from a variety of sectors in the field of K-12 online learning examined the standards, based on the outcome of phase one, over three rounds of review (see Adelstein & Barbour, in press). This second phase generated a revised list of specific design standards, as well as a revised rubric. In this article we describe the third phase of the study, in which four groups of two reviewers applied the revised rubric from phase two to current K-12 online courses in order to examine the instrument's inter-rater reliability.

As indicated above, research focused on K-12 online course design has been sparse. This may be because online course design has not been stressed in teacher professional development (Dawley, Rice, & Hinck, 2010; Rice & Dawley, 2007; Rice, Dawley, Gasell, & Florez, 2008). While it has been suggested that design should be a role completely separate from that of the classroom instructor (Davis, Roblyer, Charania, Ferdig, Harms, Compton, & Cho, 2007), this notion has been promoted in only a handful of models. For example, the Teacher Education Goes into Virtual Schooling¹ and Supporting K-12 Online Learning in Michigan² programs focused primarily on the role of the online learning facilitator, while the Iowa Learning Online³ and Michigan Online Teaching Case Studies⁴ initiatives focused on the role of the online teacher. Nevertheless, several design trends can be gleaned from the available literature. Together, a variety of general design standards, practitioner- and advocacy-generated literature, and limited research provide initial guidance on online course design, with enough commonalities to form a larger picture, albeit one drawn in broad strokes.

The first theme in the literature was keeping navigation simple. A course should be formatted to allow intuitive, easy navigation of the site. For example, course designers at the Centre for Distance Learning and Innovation (CDLI) used a template to provide consistency, so that it "doesn't frighten the kids with a different navigation menu on every screen" (Barbour, 2007a, p. 102). Elbaum, McIntyre, and Smith (2002) also recommended that designers give students a tour of the course explaining how the virtual classroom is organized. When the Virtual High School (VHS) used such an orientation, the majority of students agreed that it gave them the comfort level to navigate a course successfully (Zucker & Kozma, 2003). Consistency was also found to be important for students with special needs, as consistent navigation patterns curbed frustration (Keeler & Horney, 2007). Indeed, courses designed with clarity and simplicity not only worked for students with disabilities but were also appropriate for all users (Keeler, Richter, Anderson-Inman, Horney, & Ditson, 2007). However, a simple, linear approach to navigation should not necessarily carry over into content delivery, as a variety of activities makes for a more interesting course and taps into different student learning styles (Barbour, 2007a; Barbour & Cooze, 2004; Elbaum, McIntyre, & Smith, 2002).

The second theme was using less text and more visuals where appropriate. Visuals can offer advantages over text to students enrolled in an online course. Educators' perception that students ignore text-heavy sites supports the notion that online courses are, and should be, presented differently than traditional courses (Barbour, 2007a). Online information can be presented in unique formats, and relying solely on text is akin to assigning a reading from the textbook (Barbour, 2005). It was therefore not surprising that online educators asked for additional training so they could create multimedia and add it to their courses (Barbour, Morrison, & Adelstein, 2014). Students agreed, indicating that they found visuals and multimedia "really interesting and a lot better than sitting down and reading the book" (Barbour & Adelstein, 2013a, p. 60). A graphically intensive course also allows visual learners to flourish (Barbour & Cooze, 2004) and helps provide structure for students with disabilities (Keeler et al., 2007). However, graphics should be used only when appropriate, not simply because they are readily available (Barbour, 2007; Elbaum, McIntyre, & Smith, 2002). Too many or overstimulating visuals and backgrounds may distract students with attention deficit disorders (Keeler & Horney, 2007), which is why a mix of audio, text, and visuals is recommended.

The third theme was clear instructions. The nature of online courses, especially asynchronous ones, means that clear and detailed directions are needed to help move students along (Elbaum, McIntyre, & Smith, 2002). For example, Barbour (2007a) indicated that "the directions and the expectations [need to be] precise enough so students can work effectively on their own, not providing a roadblock for their time" (p. 104). Clarity was also a concern for students, who worried that online content was not as straightforward as the textbook or that it was not easily accessible (Barbour & Adelstein, 2013a). In fact, clarity was important enough for VHS to include it as one of the 19 standards used in its course review process. The standards asked designers to judge whether "the course is structured in such a way that organization of the course and use of medium are adequately explained and accommodating to the needs of students" (Yamashiro & Zucker, 1999, p. 57). Consistent, explicit expectations also helped exceptional students stay on track (Keeler et al., 2007). The idea is that clear expectations remove the instructions themselves as a possible barrier, allowing the student and instructor to focus on the learning.

This leads to the final theme: providing feedback to students. Since students cannot talk directly with the teacher in the classroom as they would in a traditional course, it is important to provide frequent, reliable, and predictable feedback (Elbaum, McIntyre, & Smith, 2002). As with clarity, VHS reviewed courses with feedback in mind, checking that "the structure of the course encourages regular feedback" (Yamashiro & Zucker, 1999, p. 57). Feedback can be provided in a variety of ways, from self-assessments to the auto-graded exams built into certain learning management systems (Elbaum, McIntyre, & Smith, 2002). For example, online students highly praised a self-assessment feature that gave instantaneous feedback (Barbour & Adelstein, 2013b), appreciating knowing immediately whether they were on the right track. Immediate feedback can serve as beneficial formative assessment for students (Huett, Huett, & Ringlaben, 2011). Regardless of its form, reliable feedback is vital to a course, as it keeps students up to date on their progress and engaged in their learning (Elbaum, McIntyre, & Smith, 2002).

The four themes outlined above are a small but important collection of elements common to the K-12 online course design literature. However, there is clearly more to consider in online delivery, which is the focus of the overall study. This article focuses on phase three, which field tests the revised rubric designed in phase two. The revised rubric contains the elements that an expert panel judged vital specifically for K-12 online course design.

Upon completion of phases one and two, which tested content validity through a comparison with the standards in the literature and then through expert review, the third and final phase of this study examined the reliability of the rubric based on the revised iNACOL standards. When evaluating a rubric, it is important to test not just its validity but its reliability as well (Taggart, Phifer, Nixon, & Wood, 2001). Further, Legon and Runyon (2007) noted that having instructors review online course design rubrics not only helped the instrument but also benefited the instructors, who reported feeling stimulated and motivated to improve their own courses based on what they learned from participating in the review process. Simply put, inter-rater reliability is a form of triangulation (Denzin, 1978), a method used to assess the accuracy of a specific point using different inputs.

Inter-rater reliability for pairs of reviewers using multiple response categories can be determined in different ways, with kappa being one of the more popular methods. Initially, the kappa coefficient appeared most appropriate, as it "indicates whether two judges classify entities in a similar fashion" (Brennan & Hays, 2007, p. 155). However, as the data were reviewed, it became clear that kappa could not be used. Kappa cannot be calculated if a rater gives the same rating to every item being tested, as that rater's ratings change from a variable to a constant. Because the study took the details of each specific element into account, one or both reviewers were more likely to apply the same rating to every course (this issue is discussed in further detail in the results). Recognizing the limitations of such a small pool of results, the results were ultimately reported as percentage agreement. As noted by Neuendorf (2002), "coefficients of .90 or greater are nearly always acceptable, .80 or greater is acceptable in most situations, and .70 may be appropriate in some exploratory studies for some indices" (p. 145 as cited by Moore, 2015, p. 26).
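To illustrate why kappa broke down here, the following is a minimal sketch of Cohen's kappa, κ = (p_o − p_e) / (1 − p_e), where p_o is observed agreement and p_e is the agreement expected by chance from each rater's marginal distribution. It assumes two raters scoring the same items on the rubric's 1-3 scale; the function name and the ratings are hypothetical, not data from this study. When both raters give every item the same single rating, p_e = 1 and κ is undefined (0/0). When only one rater is constant, κ works out to 0 no matter how often the two raters agree, and some statistics packages decline to report it at all.

```python
import math
from collections import Counter
from typing import Optional, Sequence


def cohen_kappa(rater1: Sequence[int], rater2: Sequence[int]) -> Optional[float]:
    """Cohen's kappa for two raters scoring the same items on a categorical scale.

    Returns None when kappa is undefined, i.e. when chance agreement p_e equals 1.
    """
    if len(rater1) != len(rater2) or len(rater1) == 0:
        raise ValueError("expected two non-empty rating lists of equal length")
    n = len(rater1)
    # Observed agreement: proportion of items given identical ratings.
    p_o = sum(a == b for a, b in zip(rater1, rater2)) / n
    # Chance agreement: product of the two raters' marginal proportions per category.
    m1, m2 = Counter(rater1), Counter(rater2)
    p_e = sum((m1[c] / n) * (m2[c] / n) for c in set(m1) | set(m2))
    if math.isclose(p_e, 1.0):
        return None  # both raters constant on the same category: 0/0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ratings of one element across five courses
# (3 = applied, 2 = partially applied, 1 = not applied).
print(cohen_kappa([3, 2, 3, 1, 3], [3, 2, 2, 1, 3]))  # varied ratings
print(cohen_kappa([3, 3, 3, 3, 3], [3, 3, 3, 3, 2]))  # one rater constant
print(cohen_kappa([3, 3, 3, 3, 3], [3, 3, 3, 3, 3]))  # both raters constant
```

In the second call the reviewers agree on four of the five courses, yet κ is 0, which shows how constant ratings on a small set of courses make kappa uninformative.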

The purpose of this phase of the study was to field test the revised rubric using online courses that were already in use by K-12 online learning programs. The reviewers were K-12 online designers and/or K-12 online instructors who were not involved with the second phase of this study (see Table 1).

Table 1. Description of the Four Groups of Reviewers

| Group | Reviewers (all names are pseudonyms) |
|---|---|
| Group A | Bob, Hilary |
| Group B | Ashley, Andrea |
| Group C | Donald, Nancy |
| Group D | Josh, Sarah |

Designers and instructors were selected because they were representative of the population most likely to use the revised rubric. The sample was both a convenience sample and a purposeful one.

Because an instrument's reliability improves when its users undergo training (Taggart et al., 2001), the groups were trained in the different areas of measurement as well as in the use of the rubric. After agreeing to participate, each reviewer was sent a training packet that included the revised rubric, examples of how to grade specific elements, and a sample course to test the rubric against (see Appendix A for a copy of this training packet). One week later, a Google Hangout meeting was held with each group to discuss the results of applying the rubric to the sample course.

After the meeting, each group received five courses to review, and reviewers had up to two weeks to complete the process individually. The courses reviewed covered core academic areas, as well as electives, for both middle school and high school, and came from two different online course providers⁵ (see Tables 2 and 3).

Table 2. Types of Courses Reviewed

| Grade Level | Elective | Language Arts | Mathematics | Science | Social Studies |
|---|---|---|---|---|---|
| 6 | X | | X* | | |
| 7 | X | X* | | | X |
| 8 | X | | | | |
| 9 | X | | | | X* |
| 10 | | | X | X | X |
| 11 | | | X | X* | |

X* = Course was designed to fit within multiple areas of middle school (MS) or high school (HS).

Table 3. Courses Reviewed by Groups

| Group | MS Elect | MS ELA | MS Math | MS Sci | MS SS | HS Elect | HS ELA | HS Math | HS Sci | HS SS |
|---|---|---|---|---|---|---|---|---|---|---|
| Group A | X | X | | | | | | X | | X, X |
| Group B | X | | | | X | | X | X | X | |
| Group C | | X | X | | | X, X | | | X | |
| Group D | | X | | | X | X, X | | | X | |

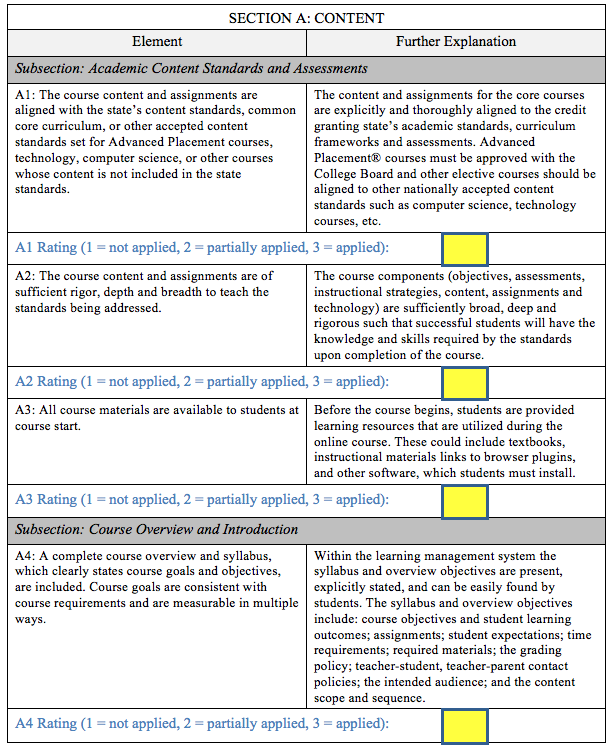

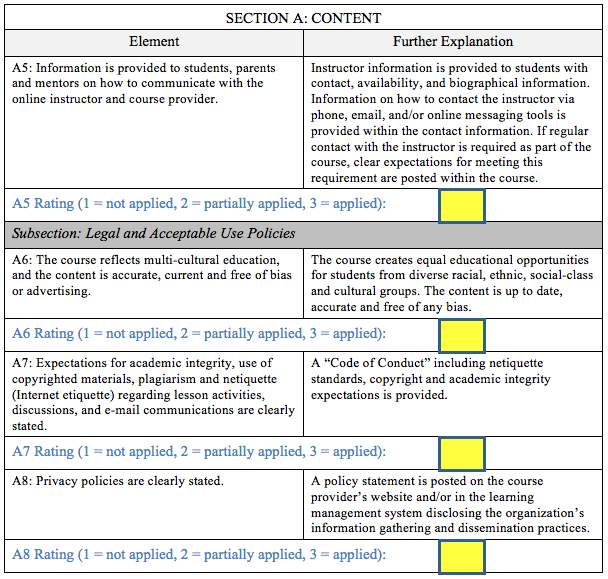

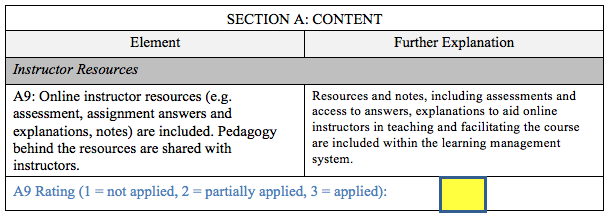

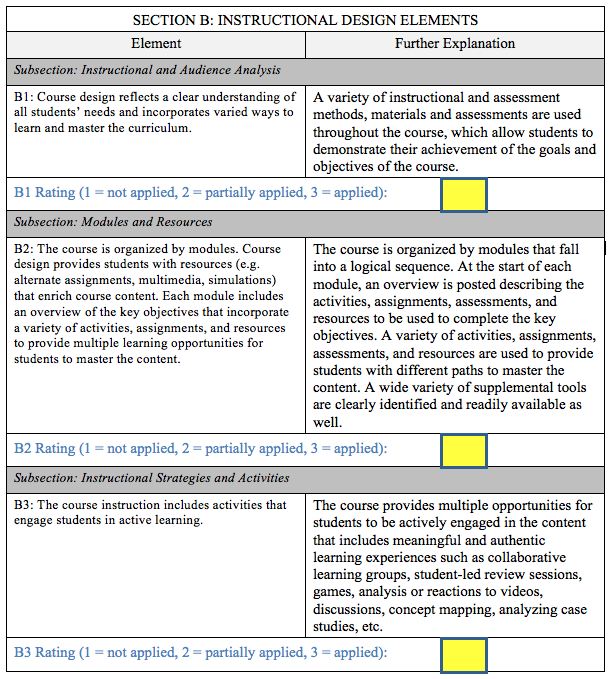

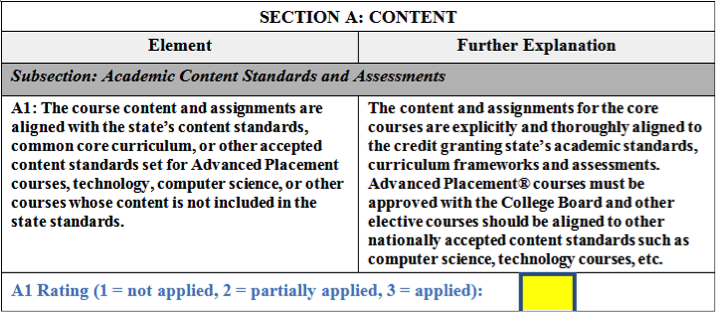

Each group used the revised rubric to review its five courses, rating each element on a three-point Likert-type scale (see Appendix B): a '3' meant the element was applied, a '2' that it was partially applied, and a '1' that it was not applied.

The paired ratings from the two members of each group were coded at three levels. According to Bresciani, Oakleaf, Kolkhorst, Nebeker, Barlow, Duncan, and Hickmott (2009), if a rubric is well designed, even untrained evaluators will reach a significant level of agreement. Results were therefore tabulated by the size of the difference per rating: 'exact match,' 'different by one,' and 'different by two.' Of particular importance were the exact matches and the differences of two. A difference of two indicates that one reviewer in the group found no evidence of the element while the other believed it was fully applied.
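The three-level coding can be sketched as follows, assuming each reviewer's ratings for one element are listed in the same course order. The function name and the ratings are hypothetical, chosen only to illustrate the tabulation. Because the scale runs from 1 to 3, a two-point difference can only arise when one reviewer gives a 1 and the other a 3.

```python
from collections import Counter
from typing import Dict, Sequence

# Absolute difference between two ratings on the 1-3 scale -> label used in the text.
CATEGORIES = {0: "exact match", 1: "different by one", 2: "different by two"}


def size_difference_profile(reviewer1: Sequence[int], reviewer2: Sequence[int]) -> Dict[str, float]:
    """Proportion of paired ratings falling in each size-difference category."""
    if len(reviewer1) != len(reviewer2) or len(reviewer1) == 0:
        raise ValueError("expected two non-empty rating lists of equal length")
    for rating in (*reviewer1, *reviewer2):
        if rating not in (1, 2, 3):
            raise ValueError(f"rating {rating} is outside the 1-3 scale")
    counts = Counter(abs(a - b) for a, b in zip(reviewer1, reviewer2))
    n = len(reviewer1)
    return {label: counts[size] / n for size, label in CATEGORIES.items()}


# Hypothetical ratings of one element by a pair of reviewers across five courses.
profile = size_difference_profile([3, 3, 2, 1, 3], [3, 2, 2, 3, 3])
for label, share in profile.items():
    print(f"{label}: {share:.0%}")
```

For these hypothetical ratings the sketch prints 60% exact match, 20% different by one, and 20% different by two; the fourth course (1 versus 3) is the single two-point disagreement.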

Results

The results of the field test are presented by section titles as used in the revised rubric.

Section A: Content

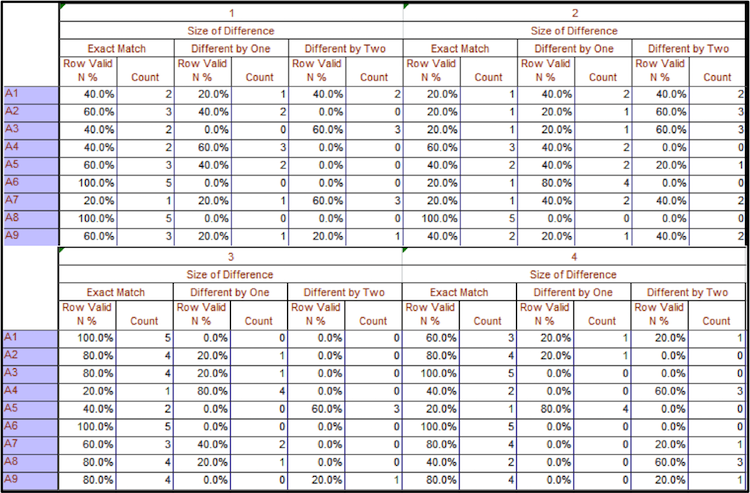

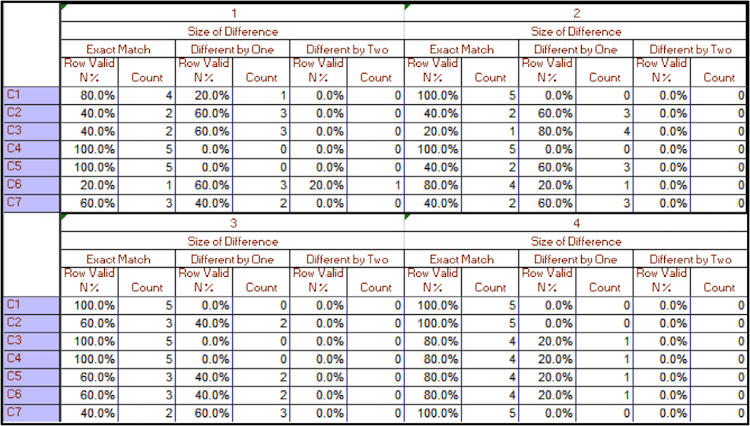

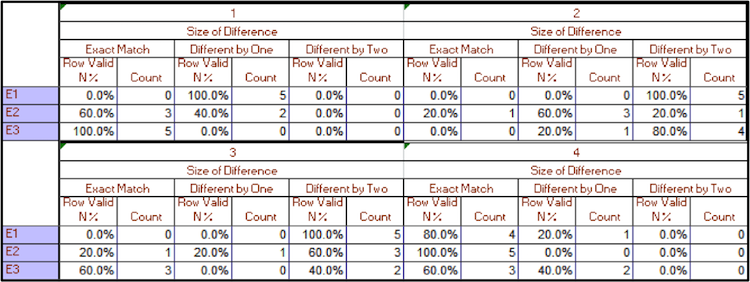

Overall, Section A did not have strong consistency across the groups (see Table 4).

Table 4. Section A Element Size Difference per Group

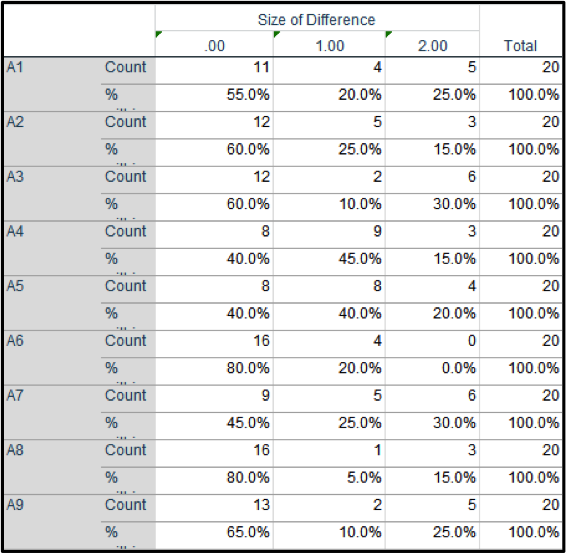

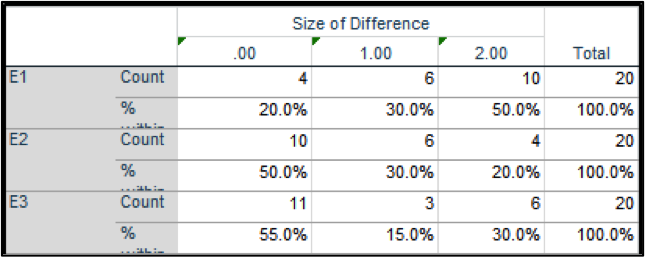

Still, more than half of the ratings were exact matches for Groups A, C, and D. Two elements in particular, A6 (i.e., the course is free of bias) and A8 (i.e., privacy policies are stated), scored high, with 80% complete agreement across all groups (see Table 5). Across all groups, Section A had 58% complete agreement.

Table 5. Section A Size Difference Cross Tabulation All Groups

While every group had at least one element in Section A for which 60% or more of the scores were off by two, no single element reached that level in a majority of the groups. A3, which concerned having materials available at the start of the course, was flagged by two groups, while A4, A5, A7, and A8 were each flagged by one.
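With five courses per group, the 60% level used here corresponds to three of the five paired ratings. Pooling all four groups gives 20 pairs per element, so element-level figures across all groups move in steps of 5% (e.g., 16 of 20 is 80%). The sketch below assumes ratings are stored per group as (reviewer 1, reviewer 2) pairs and uses exact fractions to avoid rounding at the threshold. The names and data are hypothetical illustrations, not the study's records.

```python
from fractions import Fraction
from typing import Dict, List, Sequence, Tuple

Pair = Tuple[int, int]  # (reviewer 1 rating, reviewer 2 rating) for one course


def share_differing_by(pairs: Sequence[Pair], size: int) -> Fraction:
    """Exact proportion of rating pairs whose absolute difference equals `size`."""
    return Fraction(sum(abs(a - b) == size for a, b in pairs), len(pairs))


def groups_flagged(element: Dict[str, List[Pair]], threshold: Fraction = Fraction(3, 5)) -> List[str]:
    """Groups in which at least `threshold` of the pairs differ by two points."""
    return [g for g, pairs in element.items() if share_differing_by(pairs, 2) >= threshold]


def pooled_exact_match(element: Dict[str, List[Pair]]) -> Fraction:
    """Exact-match proportion after pooling every group's pairs for one element."""
    pooled = [pair for pairs in element.values() for pair in pairs]
    return share_differing_by(pooled, 0)


# Hypothetical ratings for a single rubric element; not data from this study.
element = {
    "A": [(3, 3), (3, 3), (2, 2), (3, 2), (3, 3)],
    "B": [(1, 3), (3, 1), (1, 3), (3, 3), (2, 3)],
    "C": [(3, 3), (3, 3), (3, 3), (3, 3), (3, 3)],
    "D": [(2, 2), (3, 3), (1, 2), (3, 3), (3, 3)],
}
print(groups_flagged(element))             # groups at or above the 60% threshold
print(float(pooled_exact_match(element)))  # exact-match share across all 20 pairs
```

Here only Group B is flagged (three of five pairs differ by two), while the pooled exact match is 14 of 20, or 70%, illustrating how one troubled group can coexist with reasonable agreement across all groups.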

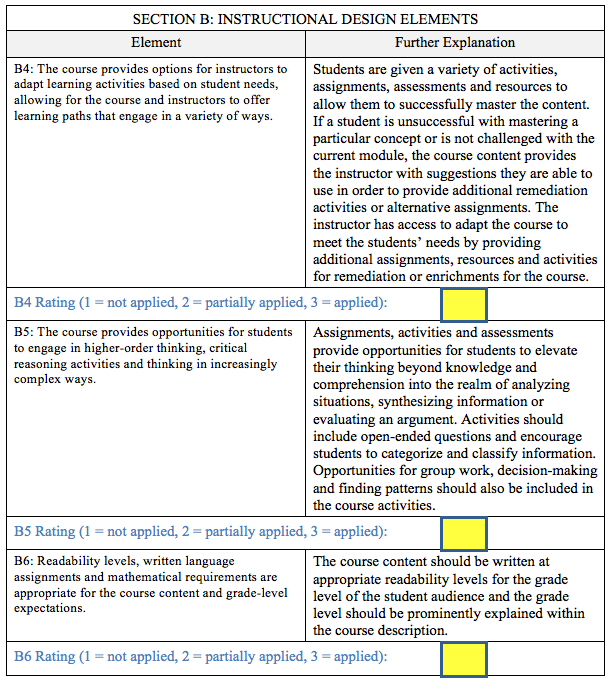

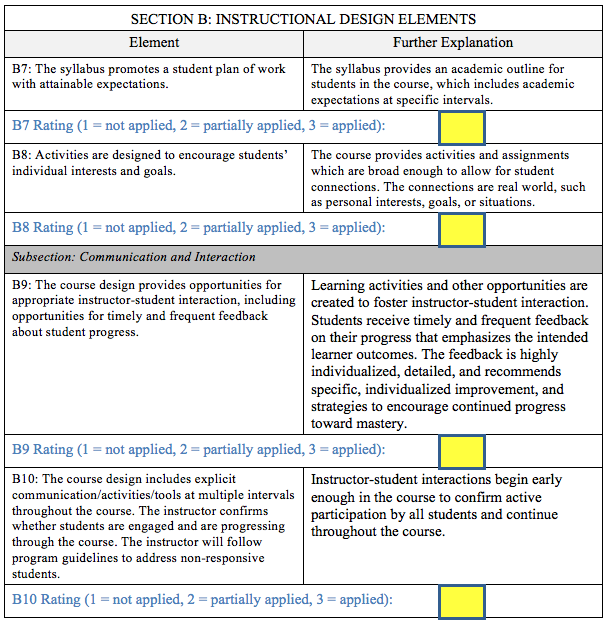

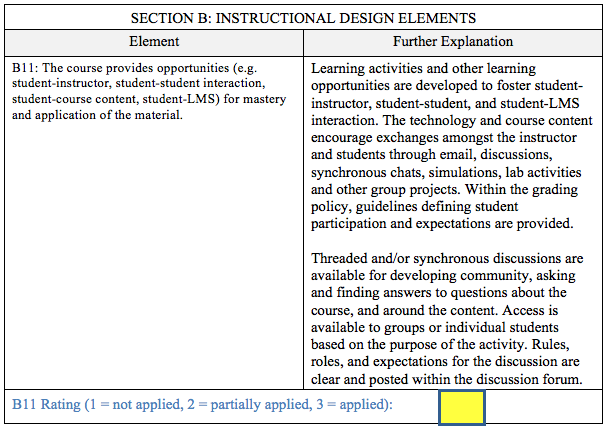

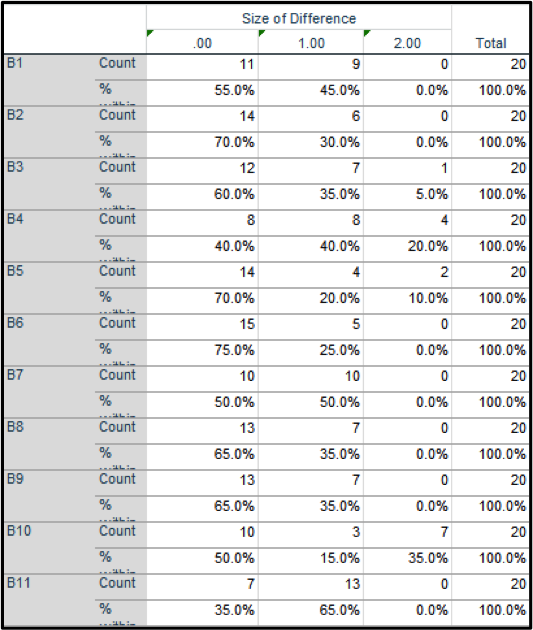

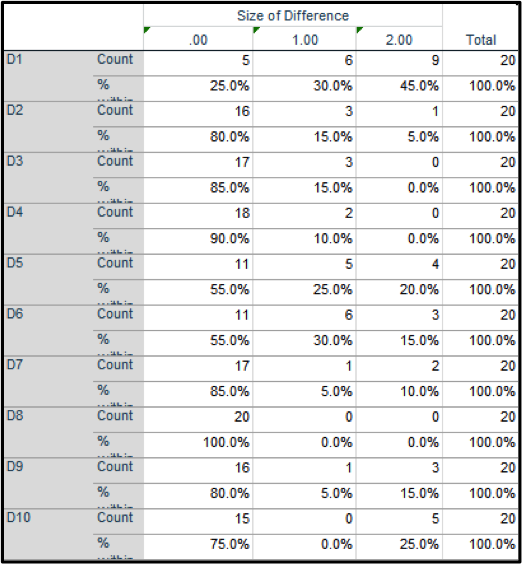

Section B: Instructional Design Elements

As with Section A, exact agreement in Section B was not notably consistent (see Table 6).

Table 6. Section B Element Size Difference per Group

Three of the four groups once again had over 50% exact match, while Group B was again under 50% for this section. Across all groups, Section B had a 57% exact match overall, yet none of the groups attained more than 75% on any given element (see Table 7).

Table 7. Section B Size Difference Cross Tabulation All Groups

There were far fewer 'different by two' counts in Section B. B4 reached the 60% threshold in one group. Only B10, which concerned explicit communication, activities, and tools in the course at multiple intervals, had 60% of its scores separated by two points in more than one group. Overall, most of the ratings were either an exact match or different by one.

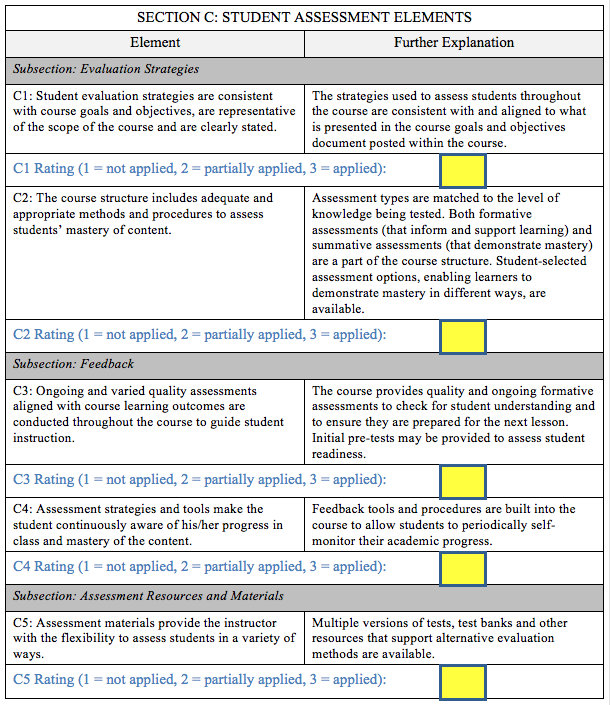

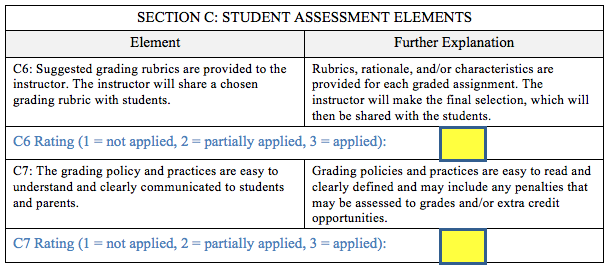

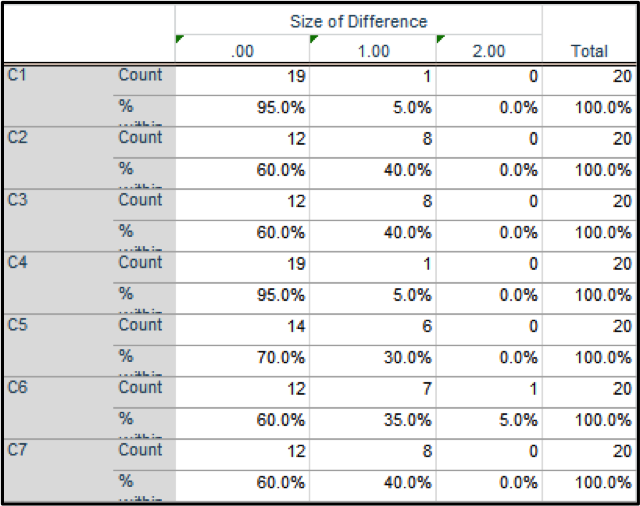

Section C: Student Assessment Elements

The level of inter-rater reliability in Section C improved markedly compared with the prior two sections, with 'exact match' being the most frequent outcome for all four groups (see Table 8).

Table 8. Section C Element Size Difference per Group

C1 (i.e., consistency of student evaluations with respect to goals and objectives) and C4 (i.e., students are continuously aware of progress) were both at 95% exact match across all groups (see Table 9).

Table 9. Section C Size Difference Cross Tabulation All Groups

Overall, the four groups had 71% exact match agreement. Nearly all ratings across the groups were either an exact match or different by one. C6, which looked for a suggested grading rubric, was the only element with a pair of scores two points apart, and this occurred only once (in Group A) out of twenty total reviews across all groups.

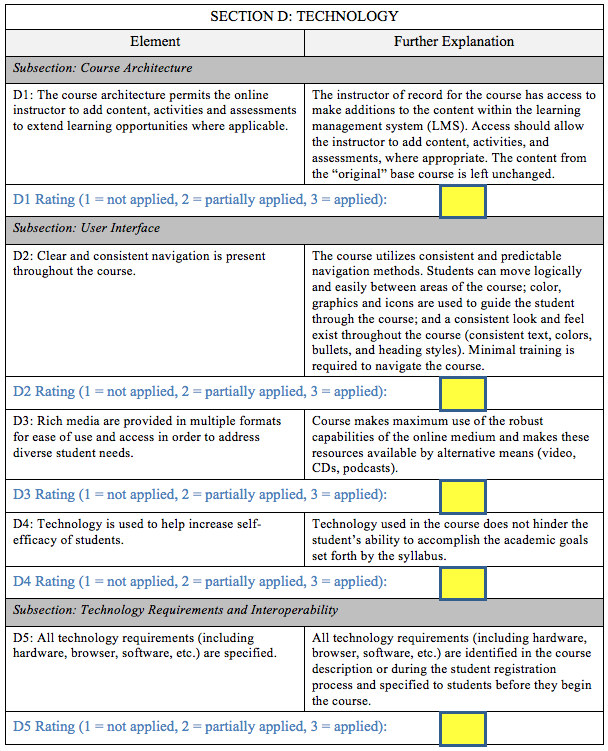

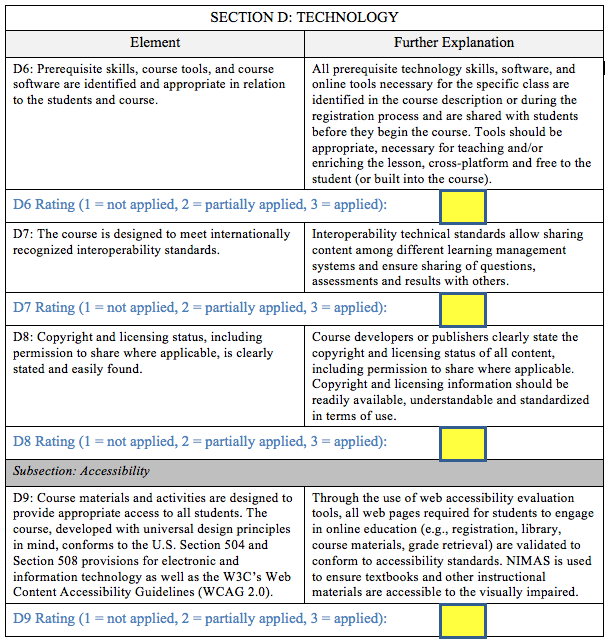

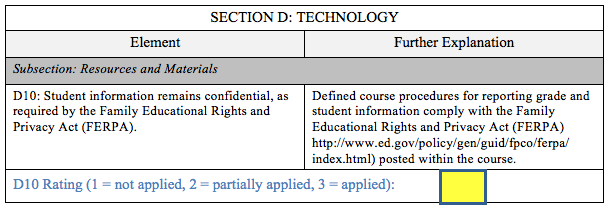

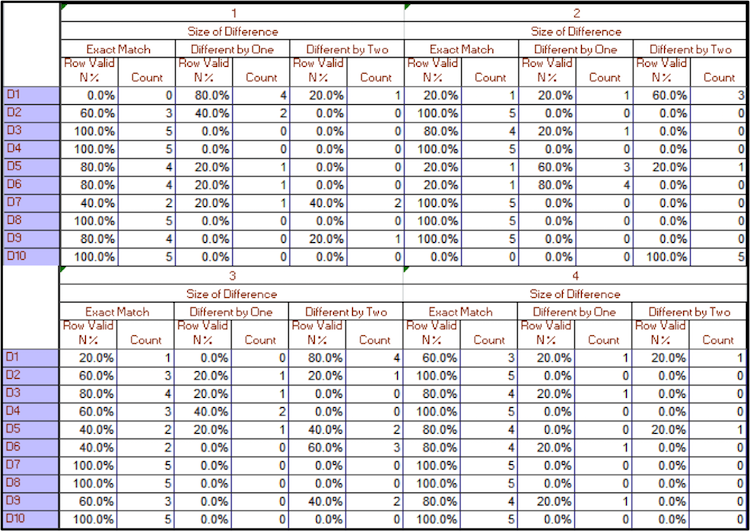

Section D: Technology

The results for Section D were mixed in comparison to the other sections of the rubric. On the one hand, Section D had high exact match agreement for all four groups (see Table 10).

Table 10. Section D Element Size Difference per Group

For example, element D8, which discussed clearly stated copyright status, was an exact match for all 20 sets of reviews (see Table 11). Seven of the elements had at least a 75% exact match agreement across the groups, putting Section D at 81% overall agreement, the highest level for any section.

Table 11. Section D Size Difference Cross Tabulation All Groups

However, Section D also had a high percentage of 'different by two' scores within individual groups. For example, for element D10, which concerns the course following Family Educational Rights and Privacy Act (FERPA) regulations and posting that information, every pair of ratings in Group B differed by two. In Group C, 80% of the ratings for D1, the element relating to course architecture that allows the instructor to add content, activities, and assessments, differed by two. Across all groups, 45% of the D1 ratings differed by two.

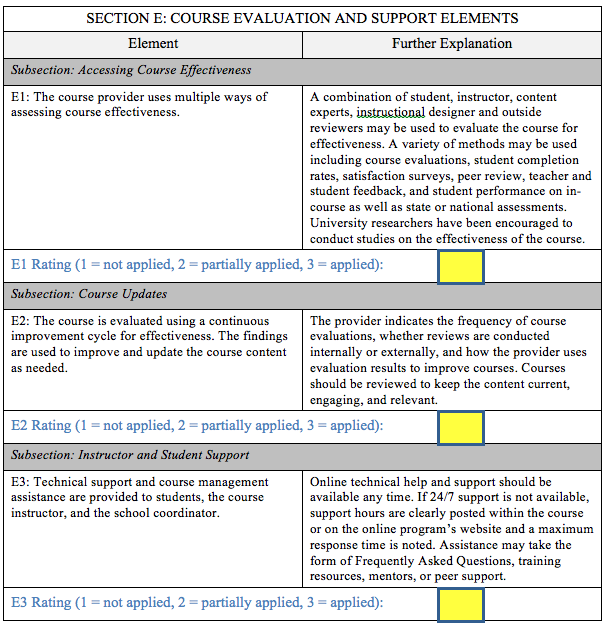

Section E: Course Evaluation and Support Elements

With the lowest element count, Section E also had the lowest exact match scores (see Table 12).

Table 12. Section E Element Size Difference per Group

Only Groups A and D had over 50% exact matches. Across all groups, element E3, ensuring that the course offers technical support and assistance to students and the instructor, had the highest exact match rating, at 55% (see Table 13).

Table 13. Section E Size Difference Cross Tabulation All Groups

Overall, Section E had a 41% exact match agreement. While Groups A and D did not have any two-point size differences, Groups B and C proved troublesome. Both Groups B and C had 100% two-point disagreement for E1, the element that checked for multiple means of assessing course effectiveness. Group B also disagreed on E3, scoring 80% of reviews with a two-point size difference.

Looking at the reviews as a whole, Groups A, C, and D were in exact agreement over 60% of the time (see Table 14), with Group D at nearly 75%.

Table 14. Overall Size Difference per Group

Group B, however, was under 50% exact agreement. Group B also had the highest proportion of two-point differences, at 17%. There are several possible reasons why Group B had so few exact matches, including personal bias or inadequate training from the principal researcher. If only exact matches were taken into account, the overall agreement of 62.9% would not be acceptable for reliability.
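The judgment above can be made explicit by placing the reported percentages into the bands quoted from Neuendorf (2002) in the method section. This is a hypothetical helper, not part of the study's procedure. Note that applying these cutoffs to raw percentage agreement, as this study does, is arguably lenient, because percentage agreement does not correct for agreement expected by chance.

```python
def neuendorf_band(agreement: float) -> str:
    """Place an agreement value in the rule-of-thumb bands quoted from Neuendorf (2002)."""
    if not 0.0 <= agreement <= 1.0:
        raise ValueError("agreement must be a proportion between 0 and 1")
    if agreement >= 0.90:
        return "nearly always acceptable"
    if agreement >= 0.80:
        return "acceptable in most situations"
    if agreement >= 0.70:
        return "may be appropriate in some exploratory studies"
    return "below the quoted thresholds"


# Figures reported in the text: overall exact match and Section D exact match.
for label, value in [("Overall", 0.629), ("Section D", 0.81)]:
    print(f"{label} ({value:.1%}): {neuendorf_band(value)}")
```

The overall 62.9% falls below every quoted band, whereas Section D's 81% would count as acceptable in most situations.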

The overall results included numerous cases in which the two reviewers' scores differed by two. Many of these elements were not matters of opinion or bias, as judgments of appropriate course rigor or a high variety of learning pathways might be, but of whether an item was present (e.g., FERPA information is posted, privacy policies). This suggests muddled course navigation, with some reviewers unable to find important course items. To reduce confusion, designers may use a standard template for their courses, much like those implemented at CDLI (Barbour, 2007b). CDLI designers insisted that navigation should be simple and minimal to avoid confusion (Barbour, 2007a). A basic document outlining all navigational procedures and the locations of important documents, for example, would also benefit students and instructors (Elbaum et al., 2002). Another option would be to use unit checklists of expectations that are communicated effectively to relevant stakeholders, including students (Huett et al., 2011).

On the other hand, elements based on yes/no judgments or simple directions (e.g., use of copyrighted materials) came close to 100% exact match. These elements lent themselves to clear, easy-to-understand modeling of how they should be applied. Proper modeling matters within a course as well, since clarity is a concern not just for instructors but also for students (Barbour & Adelstein, 2013a). When expectations are modeled correctly, the guesswork behind their meaning is removed (Barbour, 2007a). Explicit expectations and modeling can extend to a pacing guide that provides a clear overview of the requirements (Huett et al., 2011), which can have a positive impact on all students, including exceptional learners (Keeler et al., 2007).

The iNACOL (2011) National Standards for Quality Online Courses were compared to the current literature in phase one (see Adelstein & Barbour, 2016), and in phase two an expert panel helped develop a revised rubric that focused specifically on the course design standards (see Adelstein & Barbour, in press). In phase three, K-12 online educators and course designers applied the revised rubric to existing online courses. Four teams of two applied the rubric to five courses each, which allowed the researcher to test the inter-rater reliability of the revised rubric through percentage agreement. While the overall results do not meet a reliability threshold, there are still lessons to take away from this initial field test. The instances where the reviewers agreed exactly (62.9%) or differed by only one (25%) strongly outweighed the instances where they differed by two (12.1%). Individual elements throughout the rubric met a reliability threshold (i.e., 90% or 80%), while other elements may need to be revised and/or improved. Other considerations, such as bias or elements that were difficult to judge (e.g., course rigor, course assessment), need to be taken into account in the next revision. Overall, the revised rubric focused narrowly on course design elements, reinforcing ideas that are currently promoted in K-12 online education.

To discover the full potential of the revised rubric, further field tests are required to address the limitations of this initial study. One limitation was the small number of participants, which restricted how inter-rater reliability could be calculated. Adding courses for each group, as well as increasing the number of groups, would yield more reliable results. Another limitation was the use of the revised rubric with existing courses. While existing courses were an appropriate place to begin the study, a true test would be to design multiple new courses using the revised rubric. This would allow future studies to compare designer and student opinions of courses created with the revised rubric against those of courses created with other standards.

__________

David Adelstein, Instructional Technology, Wayne State University. E-mail: dave.adelstein@gmail.com

Michael K. Barbour is a Ph.D. candidate at the University of Georgia. A social studies teacher by training, having taught in the traditional classroom and virtual high school environments, Michael is interested in the use of virtual schools to provide learning opportunities to rural secondary school students. E-mail: mkbarbour@gmail.com

APPENDIX A

Overview

The overall goal will be to create a revised K-12 online course design rubric based on the iNACOL National Standards for Quality Online Courses. The first phase of research was to review the iNACOL standards against the research literature to determine the level of support within the K-12 online learning literature, as well as the broader online learning literature. For the second phase, eight experts from a variety of sectors in the field examined the standards with regard to course design, beginning with the results of phase one. This second phase resulted in a revised list of standards and a revised rubric. In the third phase, three to five teams of two reviewers will field test the revised rubric from phase two.

Volunteers Needed for Phase Three

Phase Three Outline

Phase Three Training

Phase Three Time Commitment

Items to Keep in Mind

Sample Application of Rubric

While the main training will come in the form of a group course review, this area shows examples of how to use the rubric.

Example #1: Alignment with specific standards

When you log into the course under review, you will find an area labeled Standard Alignment. Looking further, you can see that the LMS shows how each of the course areas lines up with the state standard ID code:

In this example, element A1 would receive a score of 3 (applied).

Example #2: A complete overview and syllabus

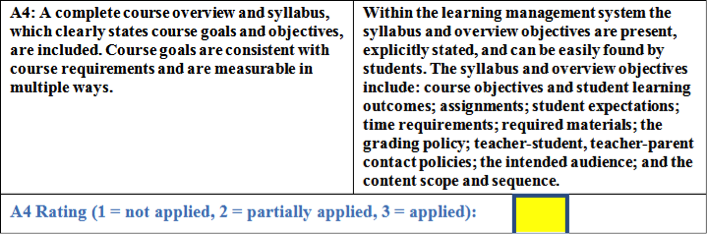

Some elements will have multiple items to look for, such as the one listed below:

The course in question includes a syllabus that clearly states the course goals and objectives:

The goals in the individual lessons are consistent with the requirements. However, the complete course overview is missing some areas: the syllabus does not cover communication and contact policies. It might seem logical to have the teacher in charge of the course add this information, but as a reviewer you are looking strictly at whether the element was applied as listed.

In this example, element A4 would receive a 2 (partially applied).

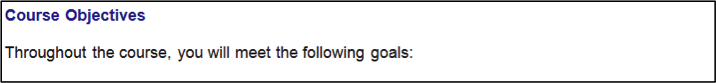

Example #3: Privacy policies

Element A8 asks if privacy policies are posted:

A look through the included documentation and the course itself shows that the privacy policy is not listed. The element text does mention that the policy can be listed on the course provider's website. However, as mentioned in Example #2, your review is limited strictly to the course and the areas of the learning management system (LMS) you have access to, and you will not have access to the provider's website.

In this example, element A8 would receive a 1 (not applied).

Example #4: Students’ needs and a variety of ways to learn

Some elements address slightly more abstract concepts. A variety of methods, materials, and assessments is not as concrete as locating a syllabus. For example:

Each unit in the course includes a warm-up, instruction, summary, assignment, and quiz. While each unit does show a variety of learning methods (interactive assignments, listening comprehension, reading, etc.), the format repeats for every unit, and most of the quizzes and exams in the course use multiple-choice questions.

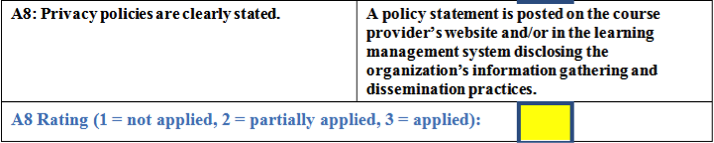

Example #5: Communication opportunities

In some instances, you will need to look outside the course at the LMS itself to review the element:

While the course does not have student-student or student-instructor interaction, the LMS does offer a communications area where email, group discussions, and chats can be set up by the instructor.

In this example, B11 would receive a 3 (applied).

APPENDIX B